When we announced the new conference FORC (Foundations of Responsible Computing) we really had no idea what kind of papers folks would send us.

Fortunately, we got a really high quality set of submissions, from which we have accepted the papers that will make up the program of the inaugural FORC. Check out the accepted papers here: https://responsiblecomputing.org/accepted-papers/

Sunday, March 29, 2020

Monday, February 17, 2020

Fair Prediction with Endogenous Behavior

Can Game Theory Help Us Choose Among Fairness Constraints?

This blog post is about a new paper, joint with Christopher Jung, Sampath Kannan, Changhwa Lee, Mallesh M. Pai, and Rakesh Vohra.

A lot of the recent boom in interest in fairness in machine learning can be traced back to the 2016 ProPublica article Machine Bias. To summarize what you will already know if you have interacted with the algorithmic fairness literature at all --- ProPublica discovered that the COMPAS recidivism prediction instrument (used to inform bail and parole decisions by predicting whether individuals would go on to commit violent crimes if released) made errors of different sorts on different populations. The false positive rate (i.e. the rate at which it incorrectly labeled people "high risk") was much higher on the African American population than on the white population, and the false negative rate (i.e. the rate at which it incorrectly labeled people as "low risk") was much higher on the white population. Because being falsely labeled high risk is harmful (it decreases the chance you are released), this was widely and reasonably viewed as unfair.

But the story wasn't so simple. Northpointe, the company that produced COMPAS (they have since changed their name), responded by pointing out that their instrument satisfied predictive parity across the two populations --- i.e. that the positive predictive value of their instrument was roughly the same for both white and African American populations. This means that their predictions conveyed the same meaning across the two populations: the people that COMPAS predicted were high risk had roughly the same chance of recidivating, on average, whether they were black or white. This is also desirable, because if we use an instrument that produces predictions whose meanings differ according to an individual's demographic group, then we are explicitly incentivizing judges to make decisions based on race, after they are shown the prediction of the instrument. Of course, we now know that simultaneously equalizing false positive rates, false negative rates, and positive predictive values across populations is generically impossible --- i.e. it is impossible except under very special conditions, such as when the underlying crime rate is exactly the same in both populations. This follows from thinking about Bayes' rule.
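
To make the Bayes' rule argument concrete, here is a minimal sketch in Python. The function name and the numbers are illustrative assumptions, not figures from COMPAS: it fixes the same false positive and false negative rates in two groups and shows that the positive predictive value then moves with the base rate.

```python
def positive_predictive_value(base_rate, fpr, fnr):
    """P(recidivates | labeled high risk), computed with Bayes' rule."""
    tpr = 1.0 - fnr
    # Total probability of being labeled high risk.
    flagged = base_rate * tpr + (1.0 - base_rate) * fpr
    return base_rate * tpr / flagged

# Identical error rates in both groups, but different (made-up) base rates.
ppv_low = positive_predictive_value(base_rate=0.3, fpr=0.2, fnr=0.3)
ppv_high = positive_predictive_value(base_rate=0.5, fpr=0.2, fnr=0.3)
print(ppv_low, ppv_high)
```

With equal error rates, the group with the higher base rate ends up with the higher positive predictive value, so all three quantities can only match when the base rates do (or in degenerate cases such as a perfect predictor).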

Another sensible notion of fairness suggests that "similarly risky people should be treated similarly". This harkens back to notions of individual fairness, and suggests that we should do something like the following: we should gather as much information about an individual as we possibly can, and condition on all of it to find a (hopefully correct) posterior belief that they will go on to commit a crime. Then, we should make incarceration decisions by subjecting everyone to the same threshold on these posterior beliefs --- any individual who crosses some uniform threshold should be incarcerated; anyone who doesn't cross the threshold should not be. This is the approach that Corbett-Davies and Goel advocate for, and it seems to have a lot going for it. In addition to uniform thresholds feeling fair, it's also easy to see that doing this is the Bayes-optimal decision rule to optimize any societal cost function that differently weights the cost of false positives and false negatives. But applying a uniform threshold on posterior distributions unfortunately will generally result in a decision rule that equalizes neither false positive and false negative rates nor positive predictive value. Similarly, satisfying these other notions of fairness will generally result in a decision rule that is sub-optimal in terms of its predictive performance.

Unfortunately, this leaves us with little guidance --- should we aim to equalize false positive and negative rates (sometimes called equalized odds in this literature)? Should we aim to equalize positive predictive value? Or should we aim for using uniform thresholds on posterior beliefs? Should we aim for something else entirely? More importantly, by what means should we aim to make these decisions?

A Game Theoretic Model

One way we can attempt to choose among different fairness "solution concepts" is to try and think about the larger societal effects that imposing a fairness constraint on a classifier will have. This is tricky, of course --- if we don't commit to some model of the world, then different fairness constraints can have either good or bad long term effects, which still doesn't give us much guidance. Of course making modeling assumptions has its own risks: inevitably the model won't match reality, and we should worry that the results that we derive in our stylized model will not tell us anything useful about the real world. Nevertheless, it is worth trying to proceed: all models are wrong, but some are useful. Our goal will be to come up with a clean, simple model, in which results are robust to modelling choices, and the necessary assumptions are clearly identified. Hopefully the result is some nugget of insight that applies outside of the model. This is what we try to do in our new paper with Chris Jung, Sampath Kannan, Changhwa Lee, Mallesh Pai, and Rakesh Vohra. We'll use the language of criminal justice here, but the model is simple enough that you could apply it to a number of other settings of interest in which we need to design binary classification rules.

In our model, individuals make rational choices about whether or not to commit crimes: that is, individuals have some "outside option" (their opportunity for legal employment, for example), some expected monetary benefit of crime, and some dis-utility for being incarcerated. In deciding whether or not to commit a crime, an individual will weigh their expected benefit of committing a crime, compared to taking their outside option --- and this calculation will involve their risk of being incarcerated if they commit a crime, and also if they do not (since inevitably any policy will both occasionally free the guilty as well as incarcerate the innocent). Different people might make different decisions because their benefits and costs of crime may differ --- for example, some people will have better opportunities for legal employment than others. And in our model, the only way two different populations differ is in their distributions of these benefits and costs. Each person draws, i.i.d. from a distribution corresponding to their group, a type which encodes this outside option value and cost for incarceration. So in our model, populations differ e.g. only in their access to legal employment opportunities, and this is what will underlie any difference in criminal base rates.

As a function of whether each person commits a crime or not, a "noisy signal" is generated. In general, think of higher signals as corresponding to increased evidence of guilt, and so if someone commits a crime, they will tend to draw higher signals than those who don't commit crimes --- but the signals are noisy, so there is no way to perfectly identify the guilty.

Incarceration decisions are made as a function of these noisy signals: society has a choice as to what incarceration rule to choose, and can potentially choose a different rule for different groups. Once an incarceration rule is chosen, this determines each person's incentive to commit crime, which in turn fixes a base rate of crime in each population. In general, base rates will be different across different groups (because outside option distributions differ), so the impossibility of e.g. equalizing false positive rates, false negative rates, and positive predictive value across groups will hold in our setting. Since crime rates in our setting are a function of the incarceration rule we choose, there is a natural objective to consider: finding the policy that minimizes crime.

Let's think about how we might implement different fairness notions in this setting. First, how should we think about posterior probabilities that an individual will commit a crime? Before we see an individual's noisy signal, but after we see his group membership, we can form our prior belief that he has committed a crime --- this is just the base crime rate in his population. After we observe his noisy signal, we can use Bayes' rule to calculate a posterior probability that he has committed a crime. So we could apply the "uniform posterior threshold" approach to fairness and use an incarceration rule that would incarcerate an individual exactly when their posterior probability of having committed a crime exceeded some uniform threshold. But note that because crime rates (and hence prior probabilities of crime) will generically differ between populations (because outside option distributions differ), setting the -same- threshold on posterior probability of crime for both groups corresponds to setting different thresholds on the raw noisy signals. This makes sense --- a Bayesian doesn't need as strong evidence to convince her that someone from a high crime group has committed a crime, as she would need to be convinced that someone from a low crime group has committed a crime, because she started off with a higher prior belief about the person from the high crime group. This (as we already know) results in a classification rule that has different false positive rates and false negative rates across groups.

On the other hand, if we want to equalize false positive and false negative rates across groups, we need an incarceration rule that sets the same threshold on raw noisy signals, independently of group. This will of course correspond to setting different thresholds on the posterior probability of crime (i.e. thresholding calibrated risk scores differently for different groups). And this will always be sub-optimal from the point of view of predicting crime --- the Bayes optimal predictor uniformly thresholds posterior probabilities.
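
The correspondence between posterior thresholds and signal thresholds can be sketched numerically. This is a toy illustration, not the paper's model: it assumes signals are N(0, 1) for people who did not commit a crime and N(1, 1) for those who did, and the base rates 0.2 and 0.4 are made up.

```python
import math

def normal_cdf(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def signal_threshold(posterior_threshold, base_rate, mu_guilty=1.0):
    """Signal at which the posterior probability of guilt hits posterior_threshold.

    Assumes N(0, 1) signals for the innocent and N(mu_guilty, 1) for the guilty,
    so the likelihood ratio at signal s is exp(mu_guilty * s - mu_guilty**2 / 2).
    """
    target_odds = posterior_threshold / (1.0 - posterior_threshold)
    prior_odds = base_rate / (1.0 - base_rate)
    return (math.log(target_odds / prior_odds) + mu_guilty ** 2 / 2.0) / mu_guilty

def error_rates(threshold, mu_guilty=1.0):
    """(false positive rate, false negative rate) when incarcerating above threshold."""
    fpr = 1.0 - normal_cdf(threshold)
    fnr = normal_cdf(threshold - mu_guilty)
    return fpr, fnr

# One uniform posterior threshold, two hypothetical base rates.
for base_rate in (0.2, 0.4):
    s = signal_threshold(0.5, base_rate)
    fpr, fnr = error_rates(s)
    print(f"base rate {base_rate}: signal threshold {s:.3f}, FPR {fpr:.3f}, FNR {fnr:.3f}")
```

Under these assumptions the higher base rate group faces a lower signal threshold and therefore a higher false positive rate, while a single signal threshold would give both groups identical error rates.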

Which Notions of Fairness Lead to Desirable Outcomes?

But only one of these solutions is consistent with our social goal of minimizing crime. And it's not the Bayes optimal predictor. The crime-minimizing solution is the one that sets different thresholds on posterior probabilities (i.e. uniform thresholds on signals) so as to equalize false positive rates and false negative rates. In other words, to minimize crime, society should explicitly commit to not conditioning on group membership, even when group membership is statistically informative for the goal of predicting crime.

Why? It's because although using demographic information is statistically informative for the goal of predicting crime when base rates differ, it is not something that is under the control of individuals --- they can control their own choices, but not what group they were born into. And making decisions about individuals using information that is not under their control has the effect of distorting their dis-incentive to commit crime --- it ends up providing less of a dis-incentive to individuals from the higher crime group (since they are more likely to be wrongly incarcerated even if they don't commit a crime). And because in our model people are rational actors, minimizing crime is all about managing incentives.
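
Here is a rough sketch of the incentive argument, under simplifying assumptions that are mine rather than the paper's exact formulation. Suppose a person's dis-incentive to commit crime is proportional to the gap between the probability of incarceration if they commit a crime and the probability if they do not. With a threshold rule on signals, that gap is the true positive rate minus the false positive rate, and it depends only on the signal threshold, not on the group. The N(0, 1) and N(1, 1) signal distributions are again illustrative.

```python
import math

def normal_cdf(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def deterrence(signal_threshold, mu_guilty=1.0):
    """P(incarcerated | crime) - P(incarcerated | no crime) for a threshold rule.

    Assumes N(mu_guilty, 1) signals after a crime and N(0, 1) signals otherwise.
    """
    tpr = 1.0 - normal_cdf(signal_threshold - mu_guilty)
    fpr = 1.0 - normal_cdf(signal_threshold)
    return tpr - fpr

# Search a grid of candidate signal thresholds for the most deterring one.
grid = [i / 100 for i in range(-300, 401)]
best_threshold = max(grid, key=deterrence)
print(best_threshold, deterrence(best_threshold))
```

Nothing in `deterrence` refers to a group or a base rate, so in this toy version the most deterring threshold is the same for everyone. Lowering the threshold for one group raises its false positive rate faster than its true positive rate and weakens that group's dis-incentive.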

This is our baseline model, and in the paper we introduce a number of extensions, generalizations, and elaborations on the model in order to stress-test it. The conclusions continue to hold in more elaborate and general settings, but at a high level, the key assumptions that are needed to reach them are that:

1. The underlying base rates are rationally responsive to the decision rule used by society.

2. Signals are observed at the same rates across populations, and

3. The signals are conditionally independent of an individual’s group, conditioned on the individual’s decision about whether or not to commit crime.

Here, conditions (2) and (3) are unlikely to hold precisely in most situations, but we show that they can be relaxed in various ways while still preserving the core conclusion.

But more generally, if we are in a setting in which we believe that individual decisions are rationally made in response to the deployed classifier, and yet the deployed classifier does not equalize false positive and negative rates, then this is an indication that either the deployed classifier is sub-optimal (for the purpose of minimizing crime rates), or that one of conditions (2) and (3) fails to hold. Since in fairness relevant settings, the failure of conditions (2) and (3) is itself undesirable, this can be a diagnostic to highlight discriminatory conditions earlier in the pipeline than the final incarceration rule. In particular, if conditions (2) or (3) fail to hold, then imposing technical fairness constraints on a deployed classifier may be premature, and instead attention should be focused on structural differences in the observations that are being fed into the deployed classifier.

Wednesday, October 30, 2019

FORC: A new conference you should know about.

Here is the CFP: https://responsiblecomputing.org/forc-2020-call-for-paper/

FORC 2020: CALL FOR PAPERS

Symposium on Foundations of Responsible Computing

The Symposium on Foundations of Responsible Computing (FORC) is a forum for mathematical research in computation and society writ large. The Symposium aims to catalyze the formation of a community supportive of the application of theoretical computer science, statistics, economics and other relevant analytical fields to problems of pressing and anticipated societal concern.

Important Dates

February 11: Submission Deadline

March 23: Notification to Authors

April 1: Camera Ready Deadline

June 1-3: The conference

Any mathematical work on computation and society is welcomed, including topics that are not yet well-established and topics that will arise in the future. This includes the investigation of definitions, algorithms and lower bounds, trade-offs, and economic incentives in a variety of areas. A small sample of topics follow: formal approaches to privacy, including differential privacy; fairness and discrimination in machine learning; bias in the formation of, and diffusion in, social networks; electoral processes and allocation of elected representatives (including redistricting).

The inaugural FORC will be held on June 1-3 at the Harvard Center for Mathematical Sciences and Applications (CMSA), and will have its proceedings published by LIPIcs. The program committee will review submissions to ensure a high quality program based on novel, rigorous and significant scientific contributions. Authors of accepted papers will have the option of publishing a 10-page version of their paper in the proceedings, or publishing only a 1-page extended abstract, to facilitate the publication of their work in another venue. 1-page abstracts will appear on the website, but not in the proceedings. The symposium itself will feature a mixture of talks by authors of accepted papers and invited talks.

Authors should upload a PDF of the paper through Easychair: https://easychair.org/conferences/?conf=forc2020. The font size should be at least 11 point and the paper should be formatted in a single column. Beyond these, there are no formatting or length requirements, but reviewers will only be asked to read the first 10 pages of the paper. It is the authors’ responsibility that the main results of the paper and their significance be clearly stated within the first 10 pages. Submissions should include proofs of all central claims, and the committee will put a premium on writing that conveys clearly and in the simplest possible way what the paper is accomplishing. Authors are free to post their paper on arXiv, etc. Future details will appear on the conference website: https://responsiblecomputing.org/.

Steering Committee

Avrim Blum

Cynthia Dwork

Sampath Kannan

Jon Kleinberg

Shafi Goldwasser

Kobbi Nissim

Toni Pitassi

Omer Reingold

Guy Rothblum

Salvatore Ruggieri

Salil Vadhan

Adrian Weller

Program Committee

Yiling Chen, Harvard

Rachel Cummings, Georgia Tech

Anupam Datta, Carnegie Mellon University

Moritz Hardt, UC Berkeley

Nicole Immorlica, Microsoft Research

Michael Kearns, University of Pennsylvania

Katrina Ligett, Hebrew University

Audra McMillan, Boston University and Northeastern

Aaron Roth, University of Pennsylvania (Chair)

Guy Rothblum, Weizmann Institute

Adam Smith, Boston University

Steven Wu, University of Minnesota

Jonathan Ullman, Northeastern

Jenn Wortman Vaughan, Microsoft Research

Suresh Venkatasubramanian, University of Utah

Nisheeth Vishnoi, Yale

James Zou, Stanford

Tuesday, September 10, 2019

A New Analysis of "Adaptive Data Analysis"

This is a blog post about our new paper, which you can read here: https://arxiv.org/abs/1909.03577

But this guarantee breaks down if the predicates that you want to estimate are chosen in sequence, adaptively. For example, suppose you are trying to fit a machine learning model to data. If you train your first model, estimate its error, and then as a result of the estimate tweak your model and estimate its error again, you are engaging in exactly this kind of adaptivity. If you repeat this many times (as you might when you are tuning hyper-parameters in your model) you could quickly get yourself into big trouble. Of course there is a simple way around this problem: just don't re-use your data. The most naive baseline that gives statistically valid answers is called "data splitting". If you want to test k models in sequence, just randomly partition your data into k equal sized parts, and test each model on a fresh part. The holdout method is just the special case of k = 2. But this "naive" method doesn't make efficient use of data: its data requirements grow linearly with the number of models you want to estimate.
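
The data splitting baseline can be sketched as follows. The function and parameter names are hypothetical: `choose_query` stands in for an analyst who picks the next predicate after seeing all previous answers, and each answer is computed on a part of the data that no earlier query touched.

```python
import random

def answer_adaptively_by_splitting(data, choose_query, k, seed=0):
    """Answer k adaptively chosen queries, each on its own fresh part of the data.

    choose_query(previous_answers) returns a function mapping a data point
    to a value in [0, 1]; it may depend on every earlier answer.
    """
    if k < 1 or k > len(data):
        raise ValueError("need between 1 and len(data) queries")
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)  # random partition into k parts
    part_size = len(shuffled) // k
    answers = []
    for i in range(k):
        query = choose_query(answers)
        part = shuffled[i * part_size:(i + 1) * part_size]
        answers.append(sum(query(x) for x in part) / len(part))
    return answers

# Usage: four queries in sequence; here the analyst always asks for the parity rate.
data = list(range(1000))
answers = answer_adaptively_by_splitting(data, lambda previous: (lambda x: x % 2), k=4)
print(answers)
```

Each query only sees `len(data) // k` points, which is where the linear growth in data requirements comes from.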

It turns out it's possible to do better by perturbing the empirical means with a little bit of noise before you use them: this is what we (Dwork, Feldman, Hardt, Pitassi, Reingold, and Roth --- DFHPRR) showed back in 2014 in this paper, which helped kick off a small subfield known as "adaptive data analysis". In a nutshell, we proved a "transfer theorem" that says the following: if your statistical estimator is simultaneously differentially private and sample accurate --- meaning that with high probability it provides estimates that are close to the empirical means --- then it will also be accurate out of sample. When paired with a simple differentially private mechanism for answering queries --- just perturbing their answers with Gaussian noise --- this gave a significant asymptotic improvement in data efficiency over the naive baseline! You can see how well it does in this figure:

Well... Hmm. In this figure, we are plotting how many adaptively chosen queries can be answered accurately as a function of dataset size. By "accurately" we have arbitrarily chosen to mean: answers that have confidence intervals of width 0.1 and uniform coverage probability 95%. On the x axis, we've plotted the dataset size n, ranging from 100,000 to about 12 million. And we've plotted two methods: the "naive" sample splitting baseline, and using the sophisticated Gaussian perturbation technique, as analyzed by the bound we proved in the "DFHPRR" paper. (Actually --- a numerically optimized variant of that bound!) You can see the problem. Even with a dataset size in the tens of millions, the sophisticated method does substantially worse than the naive method! You can extrapolate from the curve that the DFHPRR bound will eventually beat the naive bound, but it would require a truly enormous dataset. When I try extending the plot out that far my optimizer runs into numeric instability issues.
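
For readers who want to see what the Gaussian perturbation technique looks like in code, here is a minimal sketch. The function name is hypothetical, and the noise scale `sigma` is left as a free parameter: choosing it to meet a given privacy and accuracy target is exactly what the bounds discussed here are about, and this sketch does not attempt it.

```python
import random

def noisy_mean(data, query, sigma, rng):
    """Empirical mean of query over the whole dataset, plus Gaussian noise.

    query maps a data point to a value in [0, 1]; sigma is the standard
    deviation of the added noise (an input here, not derived from a bound).
    """
    empirical_mean = sum(query(x) for x in data) / len(data)
    return empirical_mean + rng.gauss(0.0, sigma)

rng = random.Random(0)
data = [0, 1, 1, 0, 1, 0, 0, 1]
print(noisy_mean(data, lambda x: x, sigma=0.05, rng=rng))
```

Unlike data splitting, every query here is answered on the full dataset; the noise is what keeps repeated, adaptive reuse from overfitting.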

There has been improvement since then. In particular, DFHPRR didn't even obtain the best bound asymptotically. It is folklore that differentially private estimates generalize in expectation: the trickier part is to show that they enjoy high probability generalization bounds. This is what we showed in a sub-optimal way in DFHPRR. In 2015, a beautiful paper by Bassily, Nissim, Steinke, Smith, Stemmer, and Ullman (BNSSSU) introduced the amazing "monitor technique" to obtain the asymptotically optimal high probability bound. An upshot of the bound was that the Gaussian mechanism can be used to answer roughly $k = n^2$ queries --- a quadratic improvement over the naive sample splitting mechanism! You can read about this technique in the lecture notes of the adaptive data analysis class Adam Smith and I taught a few years back. Let's see how it does:

Substantially better! Now we're plotting n from 100,000 up to only about 1.7 million. At this scale, the DFHPRR bound appears to be constant (at essentially 0), whereas the BNSSSU bound clearly exhibits quadratic behavior. It even beats the baseline --- by a little bit, so long as your dataset has somewhat more than a million entries... I should add that what we're plotting here is again a numerically optimized variant of the BNSSSU bound, not the closed-form version from their paper. So maybe not yet a practical technique. The problem is that the monitor argument --- while brilliant --- seems unavoidably to lead to large constant overhead.

Which brings us to our new work (this is joint work with Christopher Jung, Katrina Ligett, Seth Neel, Saeed Sharifi-Malvajerdi, and Moshe Shenfeld). We give a brand new proof of the transfer theorem. It is elementary, and in particular, obtains high probability generalization bounds directly from high probability sample-accuracy bounds, avoiding the need for the monitor argument. I think the proof is the most interesting part --- it's simple and (I think) illuminating --- but an upshot is that we get substantially better bounds, even though the improvement is just in the constants (the existing BNSSSU bound is known to be asymptotically tight). Here's the plot with our bound included --- the x-axis is the same, but note the substantially scaled-up y axis:

Proof Sketch

Ok: on to the proof. Here is the trick. In actuality, the dataset S is first sampled from $\mathcal{P}^n$, and then some data analyst interacts with a differentially private statistical estimator, resulting in some transcript $\pi$ of query-answer pairs. But now imagine that after the interaction is complete, S is resampled from $Q_\pi = (\mathcal{P}^n)|\pi$, the posterior distribution on datasets conditioned on $\pi$. If you reflect on this for a moment, you'll notice that this resampling experiment doesn't change the joint distribution on dataset-transcript pairs $(S,\pi)$ at all. So if the mechanism promised high probability sample accuracy bounds, it still promises them in this resampling experiment. But let's think about what that means: the mechanism can first commit to some set of answers $a_i$, and promise that with high probability, after S is resampled from $Q_\pi$, $|a_i - \frac{1}{n}\sum_{j=1}^n q_i(S_j)|$ is small. But under the resampling experiment, it is quite likely that the empirical value of the query $\frac{1}{n}\sum_{j=1}^n q_i(S_j)$ will end up being close to its expectation over the posterior: $q_i(Q_{\pi}) = \mathrm{E}_{S \sim Q_{\pi}}[\frac{1}{n}\sum_{j=1}^n q_i(S_j)]$. So the only way that a mechanism can promise high probability sample accuracy is if it actually promises high probability posterior accuracy: i.e. with high probability, for every query $q_i$ that was asked and answered, we must have that $|a_i - q_i(Q_\pi)|$ is small.

That part of the argument was generic --- it didn't use differential privacy at all! But it serves to focus attention on these posterior distributions $Q_\pi$ that our statistical estimator induces. And it turns out it's not hard to see that the expected values of queries on posteriors induced by differentially private mechanisms have to be close to their true answers. For $(\epsilon,0)$-differential privacy, it follows almost immediately from the definition. Here is the derivation. Pick your favorite query $q$ and your favorite transcript $\pi$, and write $S_j \sim S$ to denote a uniformly randomly selected element of a dataset $S$:

$$q (Q_\pi) = \sum_{x} q (x) \cdot \Pr_{S \sim \mathcal{P}^n, S_j \sim S} [S_j = x | \pi]= \sum_{x} q (x) \cdot \frac{\Pr [\pi | S_j = x ] \cdot \Pr_{S \sim \mathcal{P}^n, S_j \sim S} [S_j = x]}{\Pr[\pi]}$$

$$\leq \sum_{x} q (x) \cdot \frac{e^\epsilon \Pr [\pi] \cdot \Pr_{S_j \sim \mathcal{P}} [S_j = x]}{\Pr[\pi]} = e^\epsilon \cdot q (\mathcal{P})$$

Here, the inequality follows from the definition of differential privacy, which controls the effect that fixing a single element of the dataset to any value $(S_j = x)$ can have on the probability of any transcript: it can increase it multiplicatively by a factor of at most $e^\epsilon$.

And that's it: So we must have that (with probability 1!), $|q(Q_\pi) - q(\mathcal{P})| \leq e^\epsilon-1 \approx \epsilon$. The transfer theorem then follows from the triangle inequality. We get a high probability bound for free, with no need for any heavy machinery.
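
For completeness, here is a sketch of the two steps that were left implicit, assuming the query $q$ takes values in $[0,1]$ and writing $\alpha$ (a label introduced here) for the posterior accuracy bound $|a - q(Q_\pi)| \leq \alpha$. Running the same calculation with the lower bound that differential privacy gives, $\Pr[\pi | S_j = x] \geq e^{-\epsilon} \Pr[\pi]$, yields the other direction:

$$q(Q_\pi) \geq e^{-\epsilon} \cdot q(\mathcal{P}), \quad \text{so} \quad q(\mathcal{P}) - q(Q_\pi) \leq (1 - e^{-\epsilon}) \cdot q(\mathcal{P}) \leq e^\epsilon - 1$$

Combining both directions with the posterior accuracy bound via the triangle inequality:

$$|a - q(\mathcal{P})| \leq |a - q(Q_\pi)| + |q(Q_\pi) - q(\mathcal{P})| \leq \alpha + (e^\epsilon - 1)$$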

The argument is just a little more delicate in the case of $(\epsilon,\delta)$-differential privacy, and can be extended beyond linear queries --- but I think this gives the basic idea. The details are in our new "JLNRSS" paper. Incidentally, one nice thing about having many different proofs of the same theorem is that you can start to see some commonalities. One seems to be: it takes six authors to prove a transfer theorem!

Tuesday, May 28, 2019

Individual Notions of Fairness You Can Use

Our group at Penn has been thinking for a while about when individual notions of fairness might be practically achievable, and we have two new approaches.

Background:

Statistical Fairness

I've written about this before, here. But briefly: there are two families of definitions in the fairness in machine learning literature. The first group of definitions, which I call statistical fairness notions, is far and away the most popular. If you want to come up with your own statistical fairness notion, you can follow this recipe:

- Partition the world into a small number of "protected sub-groups". You will probably be thinking along the lines of race or gender or something similar when you do this.

- Pick your favorite error/accuracy metric for a classifier. This might literally be classification error, or false positive or false negative rate, or positive predictive value, or something else. Lots of options here.

- Ask that this metric be approximately equalized across your protected groups.

- Finally, enjoy your new statistical fairness measure! Congratulations!

These definitions are far and away the most popular in this literature, in large part (I think) because they are so immediately actionable. Because they are defined as conditions on a small number of expectations, you can easily check whether your classifier is "fair" according to these metrics, and (although there are some interesting computational challenges) go and try and learn classifiers subject to these constraints.

Their major problem is related to the reason for their success: they are defined as conditions on a small number of expectations or averages over people, and so they don't promise much to particular individuals. I'll borrow an example from our fairness gerrymandering paper from a few years ago to put this in sharp relief. Imagine that we are building a system to decide who to incarcerate, and we want to be "fair" with respect to both gender (men and women) and race (green and blue people). We decide that in our scenario, it is the false positives who are harmed (innocent people sent to jail), and so to be fair, we decide we should equalize the false positive rate: across men and women, and across greens and blues. But one way to do this is to jail all green men and blue women. This does indeed equalize the false positive rate (at 50%) across all four of the groups we specified, but is cold comfort if you happen to be a green man --- since then you will be jailed with certainty. The problem was our fairness constraint was never a promise to an individual to begin with, just a promise about the average behavior of our classifier over a large group. And although this is a toy example constructed to make a point, things like this happen in real data too.
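
The arithmetic of the toy example can be checked directly. This sketch assumes, for simplicity, that everyone is innocent and that the four race-gender subgroups are the same size, so one representative per subgroup is enough.

```python
from itertools import product

# One representative per (race, gender) subgroup; all are assumed innocent.
subgroups = list(product(["green", "blue"], ["man", "woman"]))
jailed = {("green", "man"), ("blue", "woman")}

def false_positive_rate(members):
    """Fraction of the (innocent) members who are jailed."""
    return sum(person in jailed for person in members) / len(members)

rates = {
    "greens": false_positive_rate([p for p in subgroups if p[0] == "green"]),
    "blues": false_positive_rate([p for p in subgroups if p[0] == "blue"]),
    "men": false_positive_rate([p for p in subgroups if p[1] == "man"]),
    "women": false_positive_rate([p for p in subgroups if p[1] == "woman"]),
    "green men": false_positive_rate([("green", "man")]),
    "green women": false_positive_rate([("green", "woman")]),
}
print(rates)
```

All four protected groups sit at a 50% false positive rate, while the intersections sit at 100% or 0%.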

Individual Fairness

Individual notions of fairness, on the other hand, really do correspond to promises made to individuals. There are at least two kinds of individual fairness definitions that have been proposed: metric fairness, and weakly meritocratic fairness. Metric fairness proposes that the learner will be handed a task specific similarity metric, and requires that individuals who are close together in the metric should have a similar probability of being classified as positive. Weakly meritocratic fairness, on the other hand, takes the (unknown) labels of an individual as a measure of merit, and requires that individuals who have a higher probability of really having a positive label should have only a higher probability of being classified as positive. This in particular implies that false positive and false negative rates should be equalized across individuals, where now the word rate is averaging over only the randomness of the classifier, not over people. What makes both of these individual notions of fairness is that they impose constraints that bind on all pairs of individuals and not just over averages of people.
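
As a small illustration of what the metric fairness constraint asks for, here is a sketch that checks it pair by pair. The names are hypothetical, and it takes one common formalization as an assumption: the difference in two individuals' probability of a positive classification should be at most their distance under the given metric.

```python
from itertools import combinations

def metric_fairness_violations(individuals, positive_probability, distance):
    """Pairs whose treatment differs by more than their distance in the metric."""
    return [
        (x, y)
        for x, y in combinations(individuals, 2)
        if abs(positive_probability(x) - positive_probability(y)) > distance(x, y)
    ]

# Toy usage: individuals are numbers and the metric is absolute difference.
individuals = [0.0, 0.1, 1.0]
metric = lambda x, y: abs(x - y)
smooth = metric_fairness_violations(individuals, lambda x: x, metric)
hard_cutoff = metric_fairness_violations(
    individuals, lambda x: 1.0 if x > 0.05 else 0.0, metric
)
print(smooth, hard_cutoff)
```

The constraint binds on every pair, which is what makes it a promise to individuals rather than to group averages; the hard cutoff fails because it treats the two nearby individuals 0.0 and 0.1 completely differently.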

Definitions like this have the advantage of strong individual-level semantics, which the statistical definitions don't have. But they also have big problems: for metric fairness, the obvious question is: where does the metric come from? Even granting that fairness should be some Lipschitz condition on a metric, it seems hard to pin down what the metric is, and different people will disagree: coming up with the metric seems to encapsulate a large part of the original problem of defining fairness. For weakly meritocratic fairness, the obvious problem is that we don't know what the labels are. It's possible to do non-trivial things if you make assumptions about the label generating process, but it's not at all clear you can do any non-trivial learning subject to this constraint if you don't make strong assumptions.

Two New Approaches:

We have two new approaches, building off of metric fairness and weakly meritocratic fairness respectively. Both have the advantages of statistical notions of fairness in that they can be put into practice without making unrealistic assumptions about the data, and without needing to wait on someone to hand us a metric. But they continue to make meaningful promises to individuals.

Subjective Individual Fairness

Let's start with our variant of metric fairness, which we call subjective individual fairness. (This is joint work with Michael Kearns, our PhD students Chris Jung and Seth Neel, our former PhD student Steven Wu, and Steven's student (our grand student!) Logan Stapleton). The paper is here: https://arxiv.org/abs/1905.10660. We stick with the premise that "similar people should be treated similarly", and that whether or not it is correct/just/etc., it is at least fair to treat two people the same way, in the sense that we classify them as positive with the same probability. But we don't want to assume anything else.

Suppose I were to create a machine learning fairness panel: I could recruit "AI Ethics" experts, moral philosophers, hyped up consultants, people off the street, toddlers, etc. I would expect that there would be as many different conceptions of fairness as there were people on the panel, and that none of them could precisely quantify what they meant by fairness --- certainly not in the form of a "fairness metric". But I could still ask these people, in particular cases, if they thought it was fair that two particular individuals be treated differently or not.

Of course, I would have no reason to expect that the responses that I got from the different panelists would be consistent with one another --- or possibly even internally consistent (we won't assume, e.g. that the responses satisfy any kind of triangle inequality). Nevertheless, once we fix a data distribution and a group of people who have opinions about fairness, we have a well defined tradeoff we can hope to manage: any classifier we could choose will have both:

1. Some error rate, and

2. Some frequency with which it makes a pair of decisions that someone in the group finds unfair.

We can hope to find classifiers that optimally trade off 1 and 2: note this is a coherent tradeoff even though we haven't forced the people to try and express their conceptions of fairness into some consistent metric. What we show is that you can do this.
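To illustrate the two quantities being traded off, here is a small Python sketch. It is not the algorithm from the paper. It assumes a randomized classifier summarized by each individual's probability of a positive classification, a hypothetical list of elicited pairs that panelists said should be treated similarly, and a tolerance `gamma` (my name) for what counts as "substantially different".

```python
def expected_error(probs, labels):
    """Expected error rate, where probs[i] = Pr[individual i is classified positive]."""
    return sum(p if y == 0 else 1 - p for p, y in zip(probs, labels)) / len(labels)

def violated_fraction(probs, pairs, gamma):
    """Fraction of elicited pairs (i, j) whose positive-classification
    probabilities differ by more than the tolerance gamma."""
    return sum(abs(probs[i] - probs[j]) > gamma for i, j in pairs) / len(pairs)

# Hypothetical data: four individuals, and two pairs the panel wants treated alike.
labels = [1, 0, 1, 0]
pairs = [(0, 1), (2, 3)]

accurate = [1.0, 0.0, 1.0, 0.0]   # perfectly accurate, ignores the panel
constant = [0.5, 0.5, 0.5, 0.5]   # treats everyone identically

for name, probs in [("accurate", accurate), ("constant", constant)]:
    print(name, expected_error(probs, labels), violated_fraction(probs, pairs, gamma=0.1))
```

In this contrived example the two extremes sit at opposite ends of the tradeoff, and the interesting classifiers are the ones in between.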

Specifically, given a set of pairs that we have determined should be treated similarly, there is an oracle-efficient algorithm that can find the optimal classifier subject to the constraint that no pair of individuals that has been specified as a constraint should have a substantially different probability of positive classification. Oracle efficiency means that what we can do is reduce the "fair learning" problem to a regular old learning problem, without fairness constraints. If we can solve the regular learning problem, we can also solve the fair learning problem. This kind of fairness constraint also generalizes in the standard way: if you ask your fairness panel about a reasonably small number of pairs, and then solve the in-sample problem subject to these constraints, the classifier you learn will also satisfy the fairness constraints out of sample. And it works: we implement the algorithm and try it out on the COMPAS data set, with fairness constraints that we elicited from 43 human (undergrad) subjects. The interesting thing is that once you have an algorithm like this, it isn't only a tool to create "fair" machine learning models: it's also a new instrument to investigate human conceptions of fairness. We already see quite a bit of variation among our 43 subjects in our preliminary experiments. We plan to pursue this direction more going forward.

Average Individual Fairness

Next, our variant of weakly meritocratic fairness. This is joint work with Michael Kearns and our student Saeed Sharifi. The paper is here: https://arxiv.org/abs/1905.10607. In certain scenarios, it really does seem tempting to think about fairness in terms of false positive rates. Criminal justice is a great example, in the sense that it is clear that everyone agrees on which outcome they want (they would like to be released from jail), and so the people we are being unfair to really do seem to be the false positives: the people who should have been released from jail, but who were mistakenly incarcerated for longer. So in our "fairness gerrymandering" example above, maybe the problem with thinking about false positive rates wasn't a problem with false positives, but with rates: i.e. the problem was that the word rate averaged over many people, and so it didn't promise you anything. Our idea is to redefine the word rate.

In some (but certainly not all) settings, people are subject to not just one, but many classification tasks. For example, consider online advertising: you might be shown thousands of targeted ads each month. Or applying for schools (a process that is centralized in cities like New York): you apply not just to one school, but to many. In situations like this, we can model the fact that we have not just a distribution over people, but also a distribution over (or collection of) problems.

Once we have a distribution over problems, we can define the error rate, or false positive rate, or any other rate you like for individuals. It is now sensible to talk about Alice's false positive rate, or Bob's error rate, because rate has been redefined as an average over problems, for a particular individual. So we can now ask for individual fairness notions in the spirit of the statistical notions of fairness we discussed above! We no longer need to define protected groups: we can now ask that the false positive rates, or error rates, be equalized across all pairs of people.
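Here is a minimal Python sketch of what "Alice's false positive rate" means once rates are averages over problems. The data are made up: two people, four classification problems each, with hypothetical true labels and predictions. It also assumes every person has at least one truly negative problem, so the rate is well defined.

```python
def rate(values):
    return sum(values) / len(values)

def individual_false_positive_rate(truth, predicted):
    """Fraction of one person's truly negative problems on which they were labeled positive."""
    on_negatives = [p for t, p in zip(truth, predicted) if t == 0]
    return rate(on_negatives)

def individual_error_rate(truth, predicted):
    """Fraction of one person's problems on which the prediction was wrong."""
    return rate([int(t != p) for t, p in zip(truth, predicted)])

# Hypothetical labels and predictions, one entry per problem.
truth = {"Alice": [0, 0, 1, 0], "Bob": [0, 1, 0, 0]}
predicted = {"Alice": [1, 0, 1, 0], "Bob": [0, 1, 0, 0]}

fpr = {name: individual_false_positive_rate(truth[name], predicted[name]) for name in truth}
err = {name: individual_error_rate(truth[name], predicted[name]) for name in truth}

# The fairness constraint asks that this gap be small across all pairs of people.
fpr_gap = max(fpr.values()) - min(fpr.values())
print(fpr, err, fpr_gap)
```

No protected groups appear anywhere: the comparison is between individuals, and the averaging is over problems.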

It turns out that given a reasonably sized sample of people, and a reasonably sized sample of problems, it is tractable to find the optimal classifier subject to constraints like this in sample, and that these guarantees generalize out of sample. The in-sample algorithm is again an oracle-efficient algorithm, or in other words, a reduction to standard, unconstrained learning. The generalization guarantee here is a little interesting, because now we are talking about simultaneous generalization in two different directions: to people we haven't seen before, and also to problems we haven't seen before. This requires thinking a little bit about what kind of object we are even trying to output: a mapping from new problems to classifiers. The details are in the paper (spoiler --- the mapping is defined by the optimal dual variables for the empirical risk minimization problem): here, I'll just point out that again, the algorithm is practical to implement, and we perform some simple experiments with it.

Tuesday, April 09, 2019

The Ethical Algorithm

I've had the good fortune to be able to work on a number of research topics so far, including privacy, fairness, algorithmic game theory, and adaptive data analysis, and the relationship between all of these things and machine learning. As academics, we do a lot of writing about the things we work on, but usually our audience is narrow and technical: other researchers in our sub-specialty. But it can be both fun and important to communicate to a wider audience as well. So my amazing colleague Michael Kearns and I wrote a book, called The Ethical Algorithm. It's coming out in October (Amazon says the release date is November 1st, but my understanding is that pre-orders will start shipping on October 4).

This was the first time for either of us writing something like this: it's not a textbook, it's a "trade book" -- a popular science book. Its intended readership isn't just computer science PhDs, but the educated public broadly. But there should be plenty in it to interest experts too, because we cover quite a bit of ground. The book is about the problems that arise when algorithmic decision making interacts with human beings --- and the emerging science about how to fix them. The topics we cover include privacy, fairness, strategic interactions and gaming, and the scientific reproducibility crisis.

Lots has been written about the problems that can arise, especially related to privacy and fairness. And we're not trying to reinvent the wheel. Instead, the focus of our book is the exciting and embryonic algorithmic science that has grown to address these issues.

The privacy chapter develops differential privacy, and its strengths and weaknesses. The fairness chapter covers recent work on algorithmic fairness that has come out of the computer science literature. The gaming chapter studies how algorithm design can affect the equilibria that emerge from large scale interactions. The reproducibility chapter explores the underlying issues that lead to false discovery, and recent algorithmic advances that hold promise in avoiding them. We try and set expectations appropriately. We don't pretend that the solutions to complex societal problems can be entirely (or even primarily) algorithmic. But we argue that embedding social values into algorithms will inevitably form an important component of any solution.

I'm really excited for it to come out. If you want, you can pre-order it now, either at Amazon: https://www.amazon.com/Ethical-Algorithm-Science-Socially-Design/dp/0190948205 or directly from the publisher: https://global.oup.com/academic/product/the-ethical-algorithm-9780190948207?cc=us&lang=en&#.XKegC9eWi2w.twitter

Tuesday, February 26, 2019

Impossibility Results in Fairness as Bayesian Inference

One of the most striking results about fairness in machine learning is the impossibility result that Alexandra Chouldechova, and separately Jon Kleinberg, Sendhil Mullainathan, and Manish Raghavan discovered a few years ago. These papers say something very crisp. I'll focus here on the binary classification setting that Alex studies because it is much simpler. There are (at least) three reasonable properties you would want your "fair" classifiers to have. They are:

1. False Positive Rate Balance: The rate at which your classifier makes errors in the positive direction (i.e. labels negative examples positive) should be the same across groups.

2. False Negative Rate Balance: The rate at which your classifier makes errors in the negative direction (i.e. labels positive examples negative) should be the same across groups.

3. Predictive Parity: The statistical "meaning" of a positive classification should be the same across groups (we'll be more specific about what this means in a moment).

What Chouldechova and KMR show is that if you want all three, you are out of luck --- unless you are in one of two very unlikely situations: Either you have a perfect classifier that never errs, or the base rate is exactly the same for both populations --- i.e. both populations have exactly the same frequency of positive examples. If you don't find yourself in one of these two unusual situations, then you have to give up on properties 1, 2, or 3.

This is discouraging, because there are good reasons to want each of properties 1, 2, and 3. And these aren't measures made up in order to formulate an impossibility result --- they have their root in the Propublica/COMPAS controversy. Roughly speaking, Propublica discovered that the COMPAS recidivism prediction algorithm violated false positive and negative rate balance, and they took the position that this made the classifier unfair. Northpointe (the creators of the COMPAS algorithm) responded by saying that their algorithm satisfied predictive parity, and took the position that this made the classifier fair. They were seemingly talking past each other by using two different definitions of what "fair" should mean. What the impossibility result says is that there is no way to satisfy both sides of this debate.

So why is this result true? The proof in Alex's paper can't be made simpler --- it's already a one-liner, following from an algebraic identity. But the first time I read it I didn't have a great intuition for why it held. Viewing the statement through the lens of Bayesian inference made the result very intuitive (at least for me). With this viewpoint, all the impossibility result is saying is: "If you have different priors about some event (say that a released inmate will go on to commit a crime) for two different populations, and you receive evidence of the same strength for both populations, then you will have different posteriors as well". This is now bordering on obvious --- because your posterior belief about an event is a combination of your prior belief and the new evidence you have received, weighted by the strength of that evidence.

Let's walk through this. Suppose we have two populations, call them $A$s and $B$s. Individuals $x$ from these populations have some true binary label $\ell(x) \in \{0,1\}$ which we are trying to predict. Individuals from the two populations are drawn from different distributions, which we'll call $D_A$ and $D_B$. We have some classifier that predicts labels $\hat\ell(x)$, and we would like it to satisfy the three fairness criteria defined above. First, let's define some terms:

The base rate for a population $i$ is just the frequency of positive labels:

$$p_i = \Pr_{x \sim D_i}[\ell(x) = 1].$$

The false positive and false negative rates of the classifier are:

$$FPR_i = \Pr_{x \sim D_i}[\hat\ell(x) = 1 | \ell(x) = 0] \ \ \ \ FNR_i = \Pr_{x \sim D_i}[\hat\ell(x) = 0 | \ell(x) = 1].$$

And the positive predictive value of the classifier is:

$$PPV_i = \Pr_{x \sim D_i}[\ell(x) = 1 | \hat\ell(x)=1].$$

Satisfying all three fairness constraints just means finding a classifier such that $FPR_A = FPR_B$, $FNR_A = FNR_B$, and $PPV_A = PPV_B$.

How should we prove that this is impossible? All three of these quantities are conditional probabilities, so we are essentially obligated to apply Bayes Rule:

$$PPV_i = \Pr_{x \sim D_i}[\ell(x) = 1 | \hat\ell(x)=1] = \frac{ \Pr_{x \sim D_i}[\hat\ell(x)=1 | \ell(x) = 1]\cdot \Pr_{x \sim D_i} [\ell(x) = 1]}{ \Pr_{x \sim D_i}[\hat \ell(x) = 1]}$$

But now these quantities on the right hand side are things we have names for. Substituting in, we get:

$$PPV_i = \frac{p_i(1-FNR_i)}{p_i(1-FNR_i) + (1-p_i)FPR_i}$$

And so now we see the problem. Suppose we have $FNR_A = FNR_B$ and $FPR_A = FPR_B$. Can we have $PPV_A = PPV_B$? There are only two ways. If $p_A = p_B$, then we are done, because the right hand side is the same for either $i \in \{A,B\}$. But if the base rates are different, then (for any classifier with $FNR_i < 1$) the two quantities can only be equal if $FPR_i = 0$, so that every positive prediction is correct; asking for the same parity among negative predictions then forces $FNR_i = 0$ as well --- i.e. our classifier must be perfect.
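The formula is easy to play with numerically. Here is a small Python sketch that evaluates it for two populations with the same error rates but different base rates. The particular numbers are made up for illustration.

```python
def ppv(base_rate, fnr, fpr):
    """Positive predictive value from the base rate and the two error rates,
    using PPV = p(1 - FNR) / (p(1 - FNR) + (1 - p) FPR)."""
    true_positive_mass = base_rate * (1 - fnr)
    false_positive_mass = (1 - base_rate) * fpr
    return true_positive_mass / (true_positive_mass + false_positive_mass)

# Same evidence strength (FNR, FPR) for both groups, different priors.
ppv_a = ppv(base_rate=0.3, fnr=0.2, fpr=0.1)
ppv_b = ppv(base_rate=0.5, fnr=0.2, fpr=0.1)
print(ppv_a, ppv_b)  # the posteriors differ because the priors differ

# A perfect classifier makes the prior irrelevant.
print(ppv(0.3, 0.0, 0.0), ppv(0.5, 0.0, 0.0))
```

With equal error rates, the population with the higher base rate ends up with the higher positive predictive value, which is the prior-versus-posterior story in miniature.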

The piece of intuition here is that the base rate is our prior belief that $\ell(x) = 1$, before we see the output of the classifier. The positive predictive value is our posterior belief that $\ell(x) = 1$, after we see the output of the classifier. And all we need to know about the classifier in order to apply Bayes rule to derive our posterior from our prior is its false positive rate and its false negative rate --- these fully characterize the "strength of the evidence." Hence: "If our prior probabilities differ, and we see evidence of a positive label of the same strength, then our posterior probabilities will differ as well."

Once you realize this, then you can generalize the fairness impossibility result to other settings by making equally obvious statements about probability elsewhere. :-)

For example, suppose we generalize the labels to be real valued instead of binary --- so when making decisions, we can model individuals using shades of gray. (e.g. in college admissions, we don't have to model individuals as "qualified" or not, but rather can model talent as a real value.) Let's fix a model for concreteness, but the particulars are not important. (The model here is related to my paper with Sampath Kannan and Juba Ziani on the downstream effects of affirmative action)

Suppose that in population $i$, labels are distributed according to a Gaussian distribution with mean $\mu_i$: $\ell(x) \sim N(\mu_i, 1)$. For an individual from group $i$, we have a test that gives an unbiased estimator of their label, with some standard deviation $\sigma_i$: $\hat \ell(x) \sim N(\ell(x), \sigma_i)$.

In a model like this, we have analogues of our fairness desiderata in the binary case:

- Analogue of Error Rate Balance: We would like our test to be equally informative about both populations: $\sigma_A = \sigma_B$.

- Analogue of Predictive Parity: Any test score $t$ should induce the same posterior expectation on true labels across populations: $$E_{D_A}[\ell(x) | \hat \ell(x) = t] = E_{D_B}[\ell(x) | \hat \ell(x) = t]$$

Can we satisfy both of these conditions at the same time? Because the normal distribution is self-conjugate (that's why we chose it!) Bayes Rule simplifies to have a nice closed form, and we can compute our posteriors as follows:

$$E_{D_i}[\ell(x) | \hat \ell(x) = t] = \frac{\sigma_i^2}{\sigma_i^2 + 1}\cdot \mu_i + \frac{1}{\sigma_i^2 + 1}\cdot t$$

So there are only two ways we can achieve both properties:

- We can of course satisfy both conditions if the prior distributions are the same for both groups: $\mu_A = \mu_B$. Then we can set $\sigma_A = \sigma_B$ and observe that the right hand side of the above expression is identical for $i \in \{A, B\}$.

- We can also satisfy both conditions if the prior means are different, but the signal is perfect: i.e. $\sigma_A = \sigma_B = 0$. (Then both posterior means are just $t$, independent of the prior means).

But we can see from inspection these are the only two cases. If $\sigma_A = \sigma_B$, but the prior means are different, then the posterior means will be different for every $t$. This is really the same impossibility result as in the binary case: all it is saying is that if I have different priors about different groups, but the evidence I receive has the same strength, then my posteriors will also be different.

So the mathematical fact is simple --- but its implications remain deep. It means we have to choose between equalizing a decision maker's posterior about the label of an individual, or providing an equally accurate signal about each individual, and that we cannot have both. Unfortunately, living without either one of these conditions can lead to real harm.
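The closed form for the Gaussian model can also be checked with a few lines of Python. This is only a sketch of the posterior-mean formula for a $N(\mu_i, 1)$ prior and a test with standard deviation $\sigma_i$. The specific means and test score are made up for illustration.

```python
def posterior_mean(mu, sigma, t):
    """E[label | test score t] when label ~ N(mu, 1) and score ~ N(label, sigma),
    with sigma the standard deviation of the test noise."""
    weight_on_prior = sigma**2 / (sigma**2 + 1)
    return weight_on_prior * mu + (1 - weight_on_prior) * t

# Equally informative tests (sigma = 1), different prior means: posteriors differ.
print(posterior_mean(mu=0.0, sigma=1.0, t=2.0))
print(posterior_mean(mu=1.0, sigma=1.0, t=2.0))

# A perfect test (sigma = 0): the prior mean no longer matters.
print(posterior_mean(mu=0.0, sigma=0.0, t=2.0))
print(posterior_mean(mu=1.0, sigma=0.0, t=2.0))
```

The noisier the test, the more weight the prior mean gets, so equal $\sigma$ with unequal $\mu$ cannot give equal posteriors.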

Saturday, January 26, 2019

Algorithmic Unfairness Without Any Bias Baked In

Discussion of (un)fairness in machine learning hit mainstream political discourse this week, when Representative Alexandria Ocasio-Cortez discussed the possibility of algorithmic bias, and was clumsily "called out" by Ryan Saavedra on twitter:

It was gratifying to see the number of responses pointing out how wrong he was --- awareness of algorithmic bias has clearly become pervasive! But most of the pushback focused on the possibility of bias being "baked in" by the designer of the algorithm, or because of latent bias embedded in the data, or both:

Bias in the data is certainly a problem, especially when labels are gathered by human beings. But it's far from being the only problem. In this post, I want to walk through a very simple example in which the algorithm designer is being entirely reasonable, there are no human beings injecting bias into the labels, and yet the resulting outcome is "unfair".

Here is the (toy) scenario -- the specifics aren't important. High school students are applying to college, and each student has some innate "talent" $I$, which we will imagine is normally distributed, with mean 100 and standard deviation 15: $I \sim N(100,15)$. The college would like to admit students who are sufficiently talented --- say one standard deviation above the mean (so, it would like to admit students with $I \geq 115$). The problem is that talent isn't directly observable. Instead, the college can observe grades $g$ and SAT scores $s$, which are noisy estimates of talent. For simplicity, let's imagine that both grades and SAT scores are independently and normally distributed, centered at a student's talent level, and also with standard deviation 15: $g \sim N(I, 15)$, $s \sim N(I, 15)$.

In this scenario, the college has a simple, optimal decision rule: It should run a linear regression to try and predict student talent from grades and SAT scores, and then it should admit the students whose predicted talent is at least 115. This is indeed "driven by math" --- since we assumed everything was normally distributed here, this turns out to correspond to the Bayesian optimal decision rule for the college.
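For this particular Gaussian model the optimal prediction can be written in closed form: it is the precision-weighted average of the prior mean and the two signals, which is what a linear regression would recover with enough data. Here is a minimal Python sketch of that rule. The function names are mine, and it assumes the grade and SAT noise are independent given talent, as in the scenario above.

```python
def predicted_talent(grade, sat, prior_mean=100.0, prior_sd=15.0, noise_sd=15.0):
    """Posterior mean of talent given a grade and an SAT score, each observed
    with independent Gaussian noise around the student's talent."""
    prior_precision = 1 / prior_sd**2
    signal_precision = 1 / noise_sd**2
    total_precision = prior_precision + 2 * signal_precision
    weighted_sum = prior_precision * prior_mean + signal_precision * (grade + sat)
    return weighted_sum / total_precision

def admit(grade, sat, cutoff=115.0):
    """Admit exactly the students whose predicted talent clears the cutoff."""
    return predicted_talent(grade, sat) >= cutoff

# With all three standard deviations equal, the rule is just (100 + g + s) / 3.
print(predicted_talent(130, 130))
print(admit(120, 120), admit(125, 125))
```

Note how much the prediction shrinks toward the mean: a student needs grades and SAT scores well above 115 to have a predicted talent of 115.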

Ok. Now let's suppose there are two populations of students, which we will call Reds and Blues. Reds are the majority population, and Blues are a small minority population --- the Blues make up only about 1% of the student body. But the Reds and the Blues are no different when it comes to talent: they both have the same talent distribution, as described above. And there is no bias baked into the grading or the exams: both the Reds and the Blues also have exactly the same grade and exam score distributions, as described above.

But there is one difference: the Blues have a bit more money than the Reds, so they each take the SAT twice, and report only the higher of the two scores to the college. This results in a small but noticeable bump in their average SAT scores, compared to the Reds. Here are the grades and exam scores for the two populations, plotted:

So what is the effect of this when we use our reasonable inference procedure? First, let's consider what happens when we learn two different regression models: one for the Blues, and a different one for the Reds. We don't see much difference:

The Red classifier makes errors approximately 11% of the time. The Blue classifier does about the same --- it makes errors about 10.4% of the time. This makes sense: the Blues artificially inflated their SAT score distribution without increasing their talent, and the classifier picked up on this and corrected for it. In fact, it is even a little more accurate!

And since we are interested in fairness, let's think about the false negative rate of our classifiers. "False Negatives" in this setting are the people who are qualified to attend the college ($I \geq 115$), but whom the college mistakenly rejects. These are really the people who have come to harm as a result of the classifier's mistakes. And the False Negative Rate is the probability that a randomly selected qualified person is mistakenly rejected from college --- i.e. the probability that a randomly selected qualified student is harmed by the classifier. We should want that the false negative rates are approximately equal across the two populations: this would mean that the burden of harm caused by the classifier's mistakes is not disproportionately borne by one population over the other. This is one reason why the difference between false negative rates across different populations has become a standard fairness metric in algorithmic fairness --- sometimes referred to as "equal opportunity."

So how do we fare on this metric? Not so badly! The Blue model has a false negative rate of 50% on the Blues, and the Red model has a false negative rate of 47% on the Reds --- so the difference between these two is a satisfyingly small 3 percentage points.

But you might reasonably object: because we have learned separate models for the Blues and the Reds, we are explicitly making admissions decisions as a function of a student's color! This might sound like a form of discrimination, baked in by the algorithm designer --- and if the two populations represent e.g. racial groups, then it's explicitly illegal in a number of settings, including lending.

So what happens if we don't allow our classifier to see group membership, and just train one classifier on the whole student body? The gap in false negative rates between the two populations balloons to 12.5%, and the overall error rate ticks up. This means if you are a qualified member of the Red population, you are substantially more likely to be mistakenly rejected by our classifier than if you are a qualified member of the Blue population.

What happened? There wasn't any malice anywhere in this data pipeline. It's just that the Red population was much larger than the Blue population, so when we trained a classifier to minimize its average error over the entire student body, it naturally fit the Red population --- which contributed much more to the average. But this means that the classifier was no longer compensating for the artificially inflated SAT scores of the Blues, and so was making a disproportionate number of errors on them --- all in their favor.
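If you want to reproduce the flavor of this experiment, here is a rough Python sketch using only the standard library. It is not the code behind the numbers quoted in this post. The sample sizes, the seed, the hand-rolled least-squares solver, and the choice to evaluate in-sample are all my assumptions, so the exact percentages it prints will differ.

```python
import random

def simulate(n, retake, rng):
    """Draw (talent, grade, sat) triples; with retake=True the reported SAT
    score is the higher of two independent attempts (the Blues' behavior)."""
    rows = []
    for _ in range(n):
        talent = rng.gauss(100, 15)
        grade = rng.gauss(talent, 15)
        sat = rng.gauss(talent, 15)
        if retake:
            sat = max(sat, rng.gauss(talent, 15))
        rows.append((talent, grade, sat))
    return rows

def fit_least_squares(rows):
    """Least-squares regression of talent on (1, grade, sat) via the normal equations."""
    xtx = [[0.0] * 3 for _ in range(3)]
    xty = [0.0] * 3
    for talent, grade, sat in rows:
        x = (1.0, grade, sat)
        for i in range(3):
            xty[i] += x[i] * talent
            for j in range(3):
                xtx[i][j] += x[i] * x[j]
    # Gauss-Jordan elimination with partial pivoting on the augmented matrix.
    a = [xtx[i] + [xty[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(3):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * w for v, w in zip(a[r], a[col])]
    return [a[i][3] / a[i][i] for i in range(3)]

def false_negative_rate(rows, coef, cutoff=115.0):
    """Among truly qualified students, the fraction whose predicted talent misses the cutoff."""
    qualified = [(g, s) for t, g, s in rows if t >= cutoff]
    rejected = sum(coef[0] + coef[1] * g + coef[2] * s < cutoff for g, s in qualified)
    return rejected / len(qualified)

rng = random.Random(0)
reds = simulate(200_000, retake=False, rng=rng)
blues = simulate(2_000, retake=True, rng=rng)   # about 1% of the student body

# Group-blind: one model fit to everyone.
pooled = fit_least_squares(reds + blues)
pooled_gap = false_negative_rate(reds, pooled) - false_negative_rate(blues, pooled)

# Group-aware: one model per population.
separate_gap = (false_negative_rate(reds, fit_least_squares(reds))
                - false_negative_rate(blues, fit_least_squares(blues)))

print("pooled model coefficients:", pooled)
print("FNR gap (Red minus Blue), pooled model:", pooled_gap)
print("FNR gap (Red minus Blue), separate models:", separate_gap)
```

The pooled coefficients should land close to the Red-optimal weights of one third on each of grades and SAT, which is the sense in which the single model "fits the Reds" and stops correcting for the Blues' inflated scores.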

This is the kind of thing that happens all the time: whenever there are two populations that have different feature distributions, learning a single classifier (that is prohibited from discriminating based on population) will fit the bigger of the two populations, simply because they contribute more to average error. Depending on the nature of the distribution difference, this can be either to the benefit or the detriment of the minority population. And not only does this not involve any explicit human bias, either on the part of the algorithm designer or the data gathering process, it is exacerbated if we artificially force the algorithm to be group blind. Well-intentioned "fairness" regulations prohibiting decision makers from taking sensitive attributes into account can actually make things less fair and less accurate at the same time.

"Socialist Rep. Alexandria Ocasio-Cortez (D-NY) claims that algorithms, which are driven by math, are racist pic.twitter.com/X2veVvAU1H" (Ryan Saavedra, @RealSaavedra, January 22, 2019)

"You know algorithms are written by people right? And that the data they are trained on is made and selected by people? And that the problems algorithms solve are decided...again...by people? And that people can be and many times are racist? Ok now you do the math" (kade, @onekade, January 22, 2019)

In this scenario, the college has a simple, optimal decision rule: It should run a linear regression to try and predict student talent from grades and SAT scores, and then it should admit the students whose predicted talent is at least 115. This is indeed "driven by math" --- since we assumed everything was normally distributed here, this turns out to correspond to the Bayesian optimal decision rule for the college.

Ok. Now let's suppose there are two populations of students, which we will call Reds and Blues. Reds are the majority population, and Blues are a small minority population --- the Blues make up only about 1% of the student body. But the Reds and the Blues are no different when it comes to talent: they both have the same talent distribution, as described above. And there is no bias baked into the grading or the exams: both the Reds and the Blues also have exactly the same grade and exam score distributions, as described above.

But there is one difference: the Blues have a bit more money than the Reds, so they each take the SAT twice, and report only the higher of the two scores to the college. This results in a small but noticeable bump in their average SAT scores, compared to the Reds. Here are the grades and exam scores for the two populations, plotted:

The Red classifier makes errors approximately 11% of the time. The Blue classifier does about the same --- it makes errors about 10.4% of the time. This makes sense: the Blues artificially inflated their SAT score distribution without increasing their talent, and the classifier picked up on this and corrected for it. In fact, it is even a little more accurate!

And since we are interested in fairness, let's think about the false negative rate of our classifiers. "False Negatives" in this setting are the people who are qualified to attend the college ($I > 115$), but whom the college mistakenly rejects. These are really the people who have come to harm as a result of the classifier's mistakes. And the False Negative Rate is the probability that a randomly selected qualified person is mistakenly rejected from college --- i.e. the probability that a randomly selected qualified student is harmed by the classifier. We should want the false negative rates to be approximately equal across the two populations: this would mean that the burden of harm caused by the classifier's mistakes is not disproportionately borne by one population over the other. This is one reason why the difference between false negative rates across different populations has become a standard fairness metric in algorithmic fairness --- sometimes referred to as "equal opportunity."
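Written out as a formula, the quantity being compared looks roughly like this. The symbol $\hat{I}$ for the classifier's predicted talent and the subscript $g$ for the group are notation introduced here for illustration, not taken from the post:

$$\mathrm{FNR}_g = \Pr\left[\hat{I} < 115 \;\middle|\; I > 115,\ \text{group} = g\right], \qquad \text{gap} = \left|\mathrm{FNR}_{\text{Red}} - \mathrm{FNR}_{\text{Blue}}\right|.$$

The conditioning on $I > 115$ is what makes this a statement about qualified students only: a classifier can have a low overall error rate and still have a large gap.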

So how do we fare on this metric? Not so badly! The Blue model has a false negative rate of 50% on the Blues, and the Red model has a false negative rate of 47% on the Reds --- so the difference between these two is a satisfyingly small 3%.

But you might reasonably object: because we have learned separate models for the Blues and the Reds, we are explicitly making admissions decisions as a function of a student's color! This might sound like a form of discrimination, baked in by the algorithm designer --- and if the two populations represent e.g. racial groups, then it's explicitly illegal in a number of settings, including lending.

So what happens if we don't allow our classifier to see group membership, and just train one classifier on the whole student body? The gap in false negative rates between the two populations balloons to 12.5%, and the overall error rate ticks up. This means if you are a qualified member of the Red population, you are substantially more likely to be mistakenly rejected by our classifier than if you are a qualified member of the Blue population.

What happened? There wasn't any malice anywhere in this data pipeline. It's just that the Red population was much larger than the Blue population, so when we trained a classifier to minimize its average error over the entire student body, it naturally fit the Red population --- which contributed much more to the average. But this means that the classifier was no longer compensating for the artificially inflated SAT scores of the Blues, and so was making a disproportionate number of errors on them --- all in their favor.

The combined admissions rule takes everyone above the black line. Since the Blues are shifted up relative to the Reds, they are admitted at a disproportionately higher rate.
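For readers who want to poke at this themselves, here is a minimal simulation sketch of the experiment. The admission threshold of 115, the 1% minority share, and the "take the SAT twice, report the best" rule come from the text; the specific distributions (talent with mean 100 and standard deviation 15, grades and SAT scores equal to talent plus independent noise of standard deviation 15), the sample sizes, and all function names are assumptions made for illustration, so the exact numbers it produces need not match the ones quoted above.

```python
import numpy as np

THRESHOLD = 115.0  # admit when predicted talent is at least 115 (from the post)


def sample_population(rng, n, sat_attempts):
    """Draw n students. All distribution parameters here are assumptions."""
    talent = rng.normal(100.0, 15.0, size=n)
    grades = talent + rng.normal(0.0, 15.0, size=n)
    # Each SAT attempt is talent plus fresh noise; only the best one is reported.
    attempts = talent[:, None] + rng.normal(0.0, 15.0, size=(n, sat_attempts))
    features = np.column_stack([grades, attempts.max(axis=1)])
    return features, talent


def fit_linear(features, talent):
    """Ordinary least squares with an intercept: talent ~ grades + SAT."""
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, talent, rcond=None)
    return coef


def false_negative_rate(coef, features, talent):
    """Fraction of qualified students (talent > 115) the rule rejects."""
    predicted = coef[0] + features @ coef[1:]
    qualified = talent > THRESHOLD
    return float(np.mean(predicted[qualified] < THRESHOLD))


def run_experiment(seed=0, n_red=990_000, n_blue=10_000):
    """Return (Red FNR, Blue FNR) for separate models and for one pooled model."""
    rng = np.random.default_rng(seed)
    red_x, red_y = sample_population(rng, n_red, sat_attempts=1)
    blue_x, blue_y = sample_population(rng, n_blue, sat_attempts=2)

    red_model = fit_linear(red_x, red_y)
    blue_model = fit_linear(blue_x, blue_y)
    # The group-blind model sees everyone but not their color.
    pooled_model = fit_linear(
        np.vstack([red_x, blue_x]), np.concatenate([red_y, blue_y])
    )

    return {
        "separate": (
            false_negative_rate(red_model, red_x, red_y),
            false_negative_rate(blue_model, blue_x, blue_y),
        ),
        "pooled": (
            false_negative_rate(pooled_model, red_x, red_y),
            false_negative_rate(pooled_model, blue_x, blue_y),
        ),
    }


if __name__ == "__main__":
    for name, (red_fnr, blue_fnr) in run_experiment().items():
        print(f"{name}: Red FNR={red_fnr:.3f}, Blue FNR={blue_fnr:.3f}, "
              f"gap={abs(red_fnr - blue_fnr):.3f}")
```

Under these assumptions, the pooled model's coefficients are dominated by the Reds, so the Blues' inflated SAT scores go uncorrected and the gap in false negative rates should come out noticeably larger than with separate models.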

Thursday, January 10, 2019

2019 SIGecom Dissertation Award: Call for Nominations

Dear all,

Please consider nominating graduating Ph.D. students for the SIGecom Dissertation Award. If you are a graduating student, consider asking your adviser or other senior mentor to nominate you.

Nominations are due on February 28, 2019. This award is given to a student who defended a thesis in 2018. It is a prestigious award and is accompanied by a $1500 prize. In the past, the grand prize has been awarded to:

2017: Aviad Rubinstein, "Hardness of Approximation Between P and NP"

2016: Peng Shi, "Prediction and Optimization in School Choice"

2015: Inbal Talgam-Cohen, "Robust Market Design: Information and Computation"

2014: S. Matthew Weinberg, "Algorithms for Strategic Agents"

2013: Balasubramanian Sivan, "Prior Robust Optimization"

And the award has had seven runners-up: Rachel Cummings, Christos Tzamos, Bo Waggoner, James Wright, Xi (Alice) Gao, Yang Cai, and Sigal Oren. You can find detailed information about the nomination process at: http://www.sigecom.org/awardd.html. We look forward to reading your nominations!

Your Award Committee,

Renato Paes Leme

Aaron Roth (Chair)

Inbal Talgam-Cohen

Monday, March 12, 2018

Call for nominations for the SIGecom Dissertation Award

Dear all,

Please consider nominating recently graduated Ph.D. students working in algorithmic game theory/mechanism design/market design for the SIGecom Dissertation Award. If you are a graduating student, consider asking your adviser or other senior mentor to nominate you.

Nominations are due at the end of this month, March 31, 2018. This award is given to a student who defended a thesis in 2017. It is a prestigious award and is accompanied by a $1500 prize. In the past, the grand prize has been awarded to:

2016: Peng Shi, "Prediction and Optimization in School Choice"

2015: Inbal Talgam-Cohen, "Robust Market Design: Information and Computation"

2014: S. Matthew Weinberg, "Algorithms for Strategic Agents"

2013: Balasubramanian Sivan, "Prior Robust Optimization"

and the award has had five runners-up: Bo Waggoner, James Wright, Xi (Alice) Gao, Yang Cai, and Sigal Oren. You can find detailed information about the nomination process at: http://www.sigecom.org/awardd.

Sunday, March 04, 2018

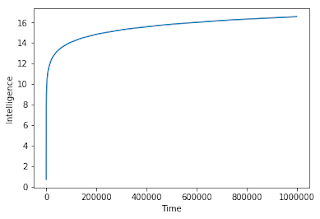

How (un)likely is an "intelligence explosion"?

First, Some Background.

A "superintelligent" AI is loosely defined to be an entity that is much better than we are at essentially any cognitive/learning/planning task. Perhaps, by analogy, a superintelligent AI is to human beings as human beings are to Bengal tigers, in terms of general intelligence. It shouldn't be hard to convince yourself that if we were in the company of a superintelligence, then we would be very right to be worried: after all, it is intelligence that allows human beings to totally dominate the world and drive Bengal tigers to near extinction, despite the fact that tigers physiologically dominate humans in most other respects. This is the case even if the superintelligence doesn't have the destruction of humanity as a goal per se (after all, we don't have it out for tigers), and even if the superintelligence is just an unconscious but super-powerful optimization algorithm. I won't rehash the arguments here (Scott does it better) but it essentially boils down to the fact that it is quite hard to anticipate what the results of optimizing an objective function will be, if the optimization is done over a sufficiently rich space of strategies. And if we get it wrong, and the optimization has some severely unpleasant side-effects? It is tempting to suggest that at that point, we just unplug the computer and start over. The problem is that if we unplug the intelligence, it won't do as well at optimizing its objective function compared to if it took steps to prevent us from unplugging it. So if its strategy space is rich enough that it is able to take steps to defend itself, it will. Lots of the most interesting research in this field seems to be about how to align optimization objectives with our own desires, or simply how to write down objective functions that don't induce the optimization algorithm to try to prevent us from unplugging it, while also not incentivizing the algorithm to unplug itself (the corrigibility problem).

Ok. It seems uncontroversial that a hypothetical superintelligence would be something we should take very seriously as a danger. But isn't it premature to worry about this, given how far off it seems to be? We aren't even that good at making product recommendations, let alone optimization algorithms so powerful that they might inadvertently destroy all of humanity. Even if superintelligence will ultimately be something to take very seriously, are we even in a position to productively think about it now, given how little we know about how such a thing might work at a technical level? This seems to be the position that Andrew Ng was taking, in his much quoted statement that (paraphrasing) worrying about the dangers of super-intelligence right now is like worrying about overpopulation on Mars. Not that it might not eventually be a serious concern, but that we will get a higher return investing our intellectual efforts right now on more immediate problems.

The standard counter to this is that super-intelligence might always seem like it is well beyond our current capabilities -- maybe centuries in the future -- until, all of a sudden, it appears as the result of an uncontrollable chain reaction known as an "intelligence explosion", or "singularity". (As far as I can tell, very few people actually think that intelligence growth would exhibit an actual mathematical singularity --- this seems instead to be a metaphor for exponential growth.) If this is what we expect, then now might very well be the time to worry about super-intelligence. The first argument of this form was put forth by British mathematician I.J. Good (of Good-Turing Frequency Estimation!):

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.”

Scott Alexander summarizes the same argument a bit more quantitatively. In this passage, he is imagining the starting point being a full-brain simulation of Einstein --- except run on faster hardware, so that our simulated Einstein operates at a much faster clock-speed than his historical namesake:

It might, like the historical Einstein, contemplate physics. Or it might contemplate an area very relevant to its own interests: artificial intelligence. In that case, instead of making a revolutionary physics breakthrough every few hours, it will make a revolutionary AI breakthrough every few hours. Each AI breakthrough it makes, it will have the opportunity to reprogram itself to take advantage of its discovery, becoming more intelligent, thus speeding up its breakthroughs further. The cycle will stop only when it reaches some physical limit – some technical challenge to further improvements that even an entity far smarter than Einstein cannot discover a way around.

To human programmers, such a cycle would look like a “critical mass”. Before the critical level, any AI advance delivers only modest benefits. But any tiny improvement that pushes an AI above the critical level would result in a feedback loop of inexorable self-improvement all the way up to some stratospheric limit of possible computing power.