I've had the good fortune to be able to work on a number of research topics so far, including privacy, fairness, algorithmic game theory, and adaptive data analysis, and the relationship between all of these things and machine learning. As academics, we do a lot of writing about the things we work on, but usually our audience is narrow and technical: other researchers in our sub-specialty. But it can be both fun and important to communicate to a wider audience as well. So my amazing colleague Michael Kearns and I wrote a book, called The Ethical Algorithm. It's coming out in October (Amazon says the release date is November 1st, but my understanding is that pre-orders will start shipping on October 4).

This was the first time for either of us writing something like this: it's not a textbook, it's a "trade book" -- a popular science book. Its intended readership isn't just computer science PhDs, but the educated public broadly. But there should be plenty in it to interest experts too, because we cover quite a bit of ground.

The book is about the problems that arise when algorithmic decision making interacts with human beings --- and the emerging science about how to fix them. The topics we cover include privacy, fairness, strategic interactions and gaming, and the scientific reproducibility crisis.

Lots has been written about the problems that can arise, especially related to privacy and fairness. And we're not trying to reinvent the wheel. Instead, the focus of our book is the exciting and embryonic algorithmic science that has grown to address these issues.

The privacy chapter develops differential privacy, and its strengths and weaknesses. The fairness chapter covers recent work on algorithmic fairness that has come out of the computer science literature. The gaming chapter studies how algorithm design can affect the equilibria that emerge from large scale interactions. The reproducibility chapter explores the underlying issues that lead to false discovery, and recent algorithmic advances that hold promise in avoiding them.

We try to set expectations appropriately. We don't pretend that the solutions to complex societal problems can be entirely (or even primarily) algorithmic. But we argue that embedding social values into algorithms will inevitably form an important component of any solution.

I'm really excited for it to come out. If you want, you can pre-order it now, either at Amazon: https://www.amazon.com/Ethical-Algorithm-Science-Socially-Design/dp/0190948205 or directly from the publisher: https://global.oup.com/academic/product/the-ethical-algorithm-9780190948207?cc=us&lang=en&#.XKegC9eWi2w.twitter

Tuesday, April 09, 2019

Tuesday, February 26, 2019

Impossibility Results in Fairness as Bayesian Inference

One of the most striking results about fairness in machine learning is the impossibility result that Alexandra Chouldechova, and separately Jon Kleinberg, Sendhil Mullainathan, and Manish Raghavan discovered a few years ago. These papers say something very crisp. I'll focus here on the binary classification setting that Alex studies because it is much simpler. There are (at least) three reasonable properties you would want your "fair" classifiers to have. They are:

- False Positive Rate Balance: The rate at which your classifier makes errors in the positive direction (i.e. labels negative examples positive) should be the same across groups.

- False Negative Rate Balance: The rate at which your classifier makes errors in the negative direction (i.e. labels positive examples negative) should be the same across groups.

- Predictive Parity: The statistical "meaning" of a positive classification should be the same across groups (we'll be more specific about what this means in a moment)

What Chouldechova and KMR show is that if you want all three, you are out of luck --- unless you are in one of two very unlikely situations: Either you have a perfect classifier that never errs, or the base rate is exactly the same for both populations --- i.e. both populations have exactly the same frequency of positive examples. If you don't find yourself in one of these two unusual situations, then you have to give up on properties 1, 2, or 3.

This is discouraging, because there are good reasons to want each of properties 1, 2, and 3. And these aren't measures made up in order to formulate an impossibility result --- they have their root in the ProPublica/COMPAS controversy. Roughly speaking, ProPublica discovered that the COMPAS recidivism prediction algorithm violated false positive and negative rate balance, and they took the position that this made the classifier unfair. Northpointe (the creators of the COMPAS algorithm) responded by saying that their algorithm satisfied predictive parity, and took the position that this made the classifier fair. They were seemingly talking past each other by using two different definitions of what "fair" should mean. What the impossibility result says is that there is no way to satisfy both sides of this debate.

So why is this result true? The proof in Alex's paper can't be made simpler --- it's already a one-liner, following from an algebraic identity. But the first time I read it I didn't have a great intuition for why it held. Viewing the statement through the lens of Bayesian inference made the result very intuitive (at least for me). With this viewpoint, all the impossibility result is saying is: "If you have different priors about some event (say that a released inmate will go on to commit a crime) for two different populations, and you receive evidence of the same strength for both populations, then you will have different posteriors as well". This is now bordering on obvious --- because your posterior belief about an event is a combination of your prior belief and the new evidence you have received, weighted by the strength of that evidence.

Let's walk through this. Suppose we have two populations, call them $A$s and $B$s. Individuals $x$ from these populations have some true binary label $\ell(x) \in \{0,1\}$ which we are trying to predict. Individuals from the two populations are drawn from different distributions, which we'll call $D_A$ and $D_B$. We have some classifier that predicts labels $\hat\ell(x)$, and we would like it to satisfy the three fairness criteria defined above. First, let's define some terms:

The base rate for a population $i$ is just the frequency of positive labels:

$$p_i = \Pr_{x \sim D_i}[\ell(x) = 1].$$

The false positive and false negative rates of the classifier are:

$$FPR_i = \Pr_{x \sim D_i}[\hat\ell(x) = 1 | \ell(x) = 0] \ \ \ \ FNR_i = \Pr_{x \sim D_i}[\hat\ell(x) = 0 | \ell(x) = 1].$$

And the positive predictive value of the classifier is:

$$PPV_i = \Pr_{x \sim D_i}[\ell(x) = 1 | \hat\ell(x)=1].$$

Satisfying all three fairness constraints just means finding a classifier such that $FPR_A = FPR_B$, $FNR_A = FNR_B$, and $PPV_A = PPV_B$.

How should we prove that this is impossible? All three of these quantities are conditional probabilities, so we are essentially obligated to apply Bayes Rule:

$$PPV_i = \Pr_{x \sim D_i}[\ell(x) = 1 | \hat\ell(x)=1] = \frac{ \Pr_{x \sim D_i}[\hat\ell(x)=1 | \ell(x) = 1]\cdot \Pr_{x \sim D_i} [\ell(x) = 1]}{ \Pr_{x \sim D_i}[\hat \ell(x) = 1]}$$

But now these quantities on the right hand side are things we have names for. Substituting in, we get:

$$PPV_i = \frac{p_i(1-FNR_i)}{p_i(1-FNR_i) + (1-p_i)FPR_i}$$

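And so now we see the problem. Suppose we have $FNR_A = FNR_B$ and $FPR_A = FPR_B$. Can we have $PPV_A = PPV_B$? There are only two ways. If $p_A = p_B$, then we are done, because the right hand side is the same for either $i \in \{A,B\}$. But if the base rates are different, then the right hand side is strictly increasing in $p_i$ whenever $FPR_i > 0$ and $FNR_i < 1$, so the only way to make these two quantities equal (short of a classifier that never catches a true positive) is to make no false positives at all, as happens when $FNR_i = FPR_i = 0$ --- i.e. if our classifier is perfect.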

The piece of intuition here is that the base rate is our prior belief that $\ell(x) = 1$, before we see the output of the classifier. The positive predictive value is our posterior belief that $\ell(x) = 1$, after we see the output of the classifier. And all we need to know about the classifier in order to apply Bayes rule to derive our posterior from our prior is its false positive rate and its false negative rate --- these fully characterize the "strength of the evidence." Hence: "If our prior probabilities differ, and we see evidence of a positive label of the same strength, then our posterior probabilities will differ as well."
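To make the prior/posterior reading concrete, here is a minimal sketch that evaluates the identity for $PPV_i$ above. The function name `ppv` and all of the numbers are hypothetical, chosen only for illustration: two groups share the same false positive and false negative rates but have different base rates.

```python
def ppv(base_rate, fpr, fnr):
    """Posterior P(label = 1 | predicted = 1), computed via Bayes' rule."""
    true_pos = base_rate * (1.0 - fnr)    # P(predicted = 1 and label = 1)
    false_pos = (1.0 - base_rate) * fpr   # P(predicted = 1 and label = 0)
    return true_pos / (true_pos + false_pos)

# Illustrative numbers (assumptions, not taken from any dataset):
# evidence of the same strength for both groups, but different priors.
fpr, fnr = 0.2, 0.3
ppv_a = ppv(0.5, fpr, fnr)   # group A: base rate 0.5
ppv_b = ppv(0.2, fpr, fnr)   # group B: base rate 0.2
print(f"PPV_A = {ppv_a:.3f}, PPV_B = {ppv_b:.3f}")

# With a perfect classifier the prior no longer matters.
perfect_a = ppv(0.5, 0.0, 0.0)
perfect_b = ppv(0.2, 0.0, 0.0)
print(perfect_a, perfect_b)
```

With equal error rates, the group with the higher base rate ends up with the higher positive predictive value, and the two only coincide once the errors vanish.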

Once you realize this, then you can generalize the fairness impossibility result to other settings by making equally obvious statements about probability elsewhere. :-)

For example, suppose we generalize the labels to be real valued instead of binary --- so when making decisions, we can model individuals using shades of gray. (E.g. in college admissions, we don't have to model individuals as "qualified" or not, but rather can model talent as a real value.) Let's fix a model for concreteness, but the particulars are not important. (The model here is related to my paper with Sampath Kannan and Juba Ziani on the downstream effects of affirmative action.)

Suppose that in population $i$, labels are distributed according to a Gaussian distribution with mean $\mu_i$: $\ell(x) \sim N(\mu_i, 1)$. For an individual from group $i$, we have a test that gives an unbiased estimator of their label, with some standard deviation $\sigma_i$: $\hat \ell(x) \sim N(\ell(x), \sigma_i)$.

In a model like this, we have analogues of our fairness desiderata in the binary case:

- Analogue of Error Rate Balance: We would like our test to be equally informative about both populations: $\sigma_A = \sigma_B$.

- Analogue of Predictive Parity: Any test score $t$ should induce the same posterior expectation on true labels across populations: $$E_{D_A}[\ell(x) | \hat \ell(x) = t] = E_{D_B}[\ell(x) | \hat \ell(x) = t]$$

Can we satisfy both of these conditions at the same time? Because the normal distribution is self conjugate (that's why we chose it!) Bayes Rule simplifies to have a nice closed form, and we can compute our posteriors as follows:

$$E_{D_i}[\ell(x) | \hat \ell(x) = t] = \frac{\sigma_i^2}{\sigma_i^2 + 1}\cdot \mu_i + \frac{1}{\sigma_i^2 + 1}\cdot t$$

So there are only two ways we can achieve both properties:

- We can of course satisfy both conditions if the prior distributions are the same for both groups: $\mu_A = \mu_B$. Then we can set $\sigma_A = \sigma_B$ and observe that the right hand side of the above expression is identical for $i \in \{A, B\}$.

- We can also satisfy both conditions if the prior means are different, but the signal is perfect: i.e. $\sigma_A = \sigma_B = 0$. (Then both posterior means are just $t$, independent of the prior means).

But we can see from inspection these are the only two cases. If $\sigma_A = \sigma_B$, but the prior means are different, then the posterior means will be different for every $t$. This is really the same impossibility result as in the binary case: all it is saying is that if I have different priors about different groups, but the evidence I receive has the same strength, then my posteriors will also be different.

So the mathematical fact is simple --- but its implications remain deep. It means we have to choose between equalizing a decision maker's posterior about the label of an individual, or providing an equally accurate signal about each individual, and that we cannot have both. Unfortunately, living without either one of these conditions can lead to real harm.
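The Gaussian version can be checked numerically as well. The following is a small sketch of the closed-form posterior mean for the model in this post (prior $N(\mu_i, 1)$, test noise with standard deviation $\sigma_i$); the function name `posterior_mean` and the particular values of $\mu_A$, $\mu_B$, $\sigma$ and $t$ are illustrative assumptions.

```python
def posterior_mean(mu, sigma, t):
    """E[label | test score = t] for prior N(mu, 1) and test noise std sigma."""
    weight_on_prior = sigma**2 / (sigma**2 + 1.0)
    return weight_on_prior * mu + (1.0 - weight_on_prior) * t

mu_a, mu_b = 0.0, 1.0   # different prior means (hypothetical values)
sigma = 0.5             # the same test accuracy for both groups

# The gap between the posteriors is the same for every test score t.
gaps = []
for t in (-1.0, 0.0, 2.0):
    gap = posterior_mean(mu_b, sigma, t) - posterior_mean(mu_a, sigma, t)
    gaps.append(gap)
    print(f"t = {t:+.1f}: posterior gap = {gap:.3f}")

# With a perfect signal (sigma = 0) both posteriors collapse to t.
perfect = (posterior_mean(mu_a, 0.0, 3.0), posterior_mean(mu_b, 0.0, 3.0))
print(perfect)
```

In this sketch the gap is $\frac{\sigma^2}{\sigma^2+1}(\mu_B - \mu_A)$ regardless of $t$, so it disappears only when the priors agree or the signal is noiseless.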

Saturday, January 26, 2019

Algorithmic Unfairness Without Any Bias Baked In

Discussion of (un)fairness in machine learning hit mainstream political discourse this week, when Representative Alexandria Ocasio-Cortez discussed the possibility of algorithmic bias, and was clumsily "called out" by Ryan Saavedra on twitter:

It was gratifying to see the number of responses pointing out how wrong he was --- awareness of algorithmic bias has clearly become pervasive! But most of the pushback focused on the possibility of bias being "baked in" by the designer of the algorithm, or because of latent bias embedded in the data, or both:

Bias in the data is certainly a problem, especially when labels are gathered by human beings. But it's far from being the only problem. In this post, I want to walk through a very simple example in which the algorithm designer is being entirely reasonable, there are no human beings injecting bias into the labels, and yet the resulting outcome is "unfair".

Here is the (toy) scenario -- the specifics aren't important. High school students are applying to college, and each student has some innate "talent" $I$, which we will imagine is normally distributed, with mean 100 and standard deviation 15: $I \sim N(100,15)$. The college would like to admit students who are sufficiently talented --- say one standard deviation above the mean (so, it would like to admit students with $I \geq 115$). The problem is that talent isn't directly observable. Instead, the college can observe grades $g$ and SAT scores $s$, which are noisy estimates of talent. For simplicity, let's imagine that both grades and SAT scores are independently and normally distributed, centered at a student's talent level, and also with standard deviation 15: $g \sim N(I, 15)$, $s \sim N(I, 15)$.

In this scenario, the college has a simple, optimal decision rule: It should run a linear regression to try and predict student talent from grades and SAT scores, and then it should admit the students whose predicted talent is at least 115. This is indeed "driven by math" --- since we assumed everything was normally distributed here, this turns out to correspond to the Bayesian optimal decision rule for the college.
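To see why a linear rule is the natural one here, it may help to write out the posterior mean directly. As a sketch, assuming (as in the setup above) that the 15s are standard deviations and that the noise in $g$ and $s$ is independent given $I$, the prior and the two signals all carry the same precision $1/15^2$, so the posterior mean is their simple average:

$$E[I \mid g, s] = \frac{\frac{1}{15^2}\cdot 100 + \frac{1}{15^2}\cdot g + \frac{1}{15^2}\cdot s}{\frac{3}{15^2}} = \frac{100 + g + s}{3}.$$

Under these assumptions, admitting students whose predicted talent is at least 115 is the same as admitting those with $g + s \geq 245$, which is the kind of linear threshold a regression would recover given enough data.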

Ok. Now let's suppose there are two populations of students, which we will call Reds and Blues. Reds are the majority population, and Blues are a small minority population --- the Blues make up only about 1% of the student body. But the Reds and the Blues are no different when it comes to talent: they both have the same talent distribution, as described above. And there is no bias baked into the grading or the exams: both the Reds and the Blues also have exactly the same grade and exam score distributions, as described above.

But there is one difference: the Blues have a bit more money than the Reds, so they each take the SAT twice, and report only the highest of the two scores to the college. This results in a small but noticeable bump in their average SAT scores, compared to the Reds. Here are the grades and exam scores for the two populations, plotted:

So what is the effect of this when we use our reasonable inference procedure? First, let's consider what happens when we learn two different regression models: one for the Blues, and a different one for the Reds. We don't see much difference:

The Red classifier makes errors approximately 11% of the time. The Blue classifier does about the same --- it makes errors about 10.4% of the time. This makes sense: the Blues artificially inflated their SAT score distribution without increasing their talent, and the classifier picked up on this and corrected for it. In fact, it is even a little more accurate!

And since we are interested in fairness, let's think about the false negative rate of our classifiers. "False Negatives" in this setting are the people who are qualified to attend the college ($I \geq 115$), but whom the college mistakenly rejects. These are really the people who have come to harm as a result of the classifier's mistakes. And the False Negative Rate is the probability that a randomly selected qualified person is mistakenly rejected from college --- i.e. the probability that a randomly selected qualified student is harmed by the classifier. We should want that the false negative rates are approximately equal across the two populations: this would mean that the burden of harm caused by the classifier's mistakes is not disproportionately borne by one population over the other. This is one reason why the difference between false negative rates across different populations has become a standard fairness metric in algorithmic fairness --- sometimes referred to as "equal opportunity."

So how do we fare on this metric? Not so badly! The Blue model has a false negative rate of 50% on the Blues, and the Red model has a false negative rate of 47% on the Reds --- so the difference between these two is a satisfyingly small 3%.

But you might reasonably object: because we have learned separate models for the Blues and the Reds, we are explicitly making admissions decisions as a function of a student's color! This might sound like a form of discrimination, baked in by the algorithm designer --- and if the two populations represent e.g. racial groups, then it's explicitly illegal in a number of settings, including lending.

So what happens if we don't allow our classifier to see group membership, and just train one classifier on the whole student body? The gap in false negative rates between the two populations balloons to 12.5%, and the overall error rate ticks up. This means if you are a qualified member of the Red population, you are substantially more likely to be mistakenly rejected by our classifier than if you are a qualified member of the Blue population.

What happened? There wasn't any malice anywhere in this data pipeline. It's just that the Red population was much larger than the Blue population, so when we trained a classifier to minimize its average error over the entire student body, it naturally fit the Red population --- which contributed much more to the average. But this means that the classifier was no longer compensating for the artificially inflated SAT scores of the Blues, and so was making a disproportionate number of errors on them --- all in their favor.
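For readers who want to try this themselves, here is a rough simulation sketch of the scenario using NumPy. It is not the code behind the numbers quoted in this post: the sample sizes, the random seed, the helper names (`sample`, `fit`, `false_negative_rate`) and the choice to measure false negative rates on fresh samples are all assumptions made for illustration, so the exact percentages will differ from those above.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, for repeatability only
CUTOFF = 115.0                  # admit if predicted talent >= 115

def sample(n, retake):
    """Draw n students; return (talent, design matrix [1, grades, SAT])."""
    talent = rng.normal(100.0, 15.0, n)
    grades = talent + rng.normal(0.0, 15.0, n)
    sat = talent + rng.normal(0.0, 15.0, n)
    if retake:  # Blues report the higher of two independent attempts
        sat = np.maximum(sat, talent + rng.normal(0.0, 15.0, n))
    return talent, np.column_stack([np.ones(n), grades, sat])

def fit(X, talent):
    """Ordinary least squares regression of talent on grades and SAT."""
    coef, *_ = np.linalg.lstsq(X, talent, rcond=None)
    return coef

def false_negative_rate(coef, X, talent):
    """Fraction of qualified students whose predicted talent is below the cutoff."""
    qualified = talent >= CUTOFF
    rejected = X @ coef < CUTOFF
    return rejected[qualified].mean()

# Training data: Blues are 1% of the student body (sizes are assumptions).
red_t, red_X = sample(495_000, retake=False)
blue_t, blue_X = sample(5_000, retake=True)
red_model = fit(red_X, red_t)
blue_model = fit(blue_X, blue_t)
pooled_model = fit(np.vstack([red_X, blue_X]), np.concatenate([red_t, blue_t]))

# Fresh evaluation samples, large enough that the estimated rates are stable.
red_t, red_X = sample(200_000, retake=False)
blue_t, blue_X = sample(200_000, retake=True)

separate_gap = (false_negative_rate(red_model, red_X, red_t)
                - false_negative_rate(blue_model, blue_X, blue_t))
pooled_gap = (false_negative_rate(pooled_model, red_X, red_t)
              - false_negative_rate(pooled_model, blue_X, blue_t))
print(f"FNR gap (Red - Blue), separate models: {separate_gap:+.3f}")
print(f"FNR gap (Red - Blue), pooled model:    {pooled_gap:+.3f}")
```

The pooled model is fit almost entirely to the Reds, so it does not discount the Blues' inflated SAT scores, and the gap in false negative rates should come out noticeably larger than with separate models.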

This is the kind of thing that happens all the time: whenever there are two populations that have different feature distributions, learning a single classifier (that is prohibited from discriminating based on population) will fit the bigger of the two populations, simply because they contribute more to average error. Depending on the nature of the distribution difference, this can be either to the benefit or the detriment of the minority population. And not only does this not involve any explicit human bias, either on the part of the algorithm designer or the data gathering process, it is exacerbated if we artificially force the algorithm to be group blind. Well intentioned "fairness" regulations prohibiting decision makers from taking sensitive attributes into account can actually make things less fair and less accurate at the same time.

Socialist Rep. Alexandria Ocasio-Cortez (D-NY) claims that algorithms, which are driven by math, are racist pic.twitter.com/X2veVvAU1H — Ryan Saavedra (@RealSaavedra) January 22, 2019

You know algorithms are written by people right? And that the data they are trained on is made and selected by people? And that the problems algorithms solve are decided...again...by people? And that people can be and many times are racist? Ok now you do the math— kade (@onekade) January 22, 2019

Figure caption: The combined admissions rule takes everyone above the black line. Since the Blues are shifted up relative to the Reds, they are admitted at a disproportionately higher rate.


Thursday, January 10, 2019

2019 SIGecom Dissertation Award: Call for Nominations

Dear all,

Please consider nominating graduating Ph.D. students for the SIGecom Dissertation Award. If you are a graduating student, consider asking your adviser or other senior mentor to nominate you.

Nominations are due on February 28, 2019. This award is given to a student who defended a thesis in 2018. It is a prestigious award and is accompanied by a $1500 prize. In the past, the grand prize has been awarded to:

2017: Aviad Rubinstein, "Hardness of Approximation Between P and NP"

2016: Peng Shi, "Prediction and Optimization in School Choice"

2015: Inbal Talgam-Cohen, "Robust Market Design: Information and Computation"

2014: S. Matthew Weinberg, "Algorithms for Strategic Agents"

2013: Balasubramanian Sivan, "Prior Robust Optimization"

And the award has had seven runners-up: Rachel Cummings, Christos Tzamos, Bo Waggoner, James Wright, Xi (Alice) Gao, Yang Cai, and Sigal Oren. You can find detailed information about the nomination process at: http://www.sigecom.org/awardd.html. We look forward to reading your nominations!

Your Award Committee,

Renato Paes Leme

Aaron Roth (Chair)

Inbal Talgam-Cohen

Monday, March 12, 2018

Call for nominations for the SIGecom Dissertation Award

Dear all,

Please consider nominating recently graduated Ph.D. students working in algorithmic game theory/mechanism design/market design for the SIGecom Dissertation Award. If you are a graduating student, consider asking your adviser or other senior mentor to nominate you.

Nominations are due at the end of this month, March 31, 2018. This award is given to a student who defended a thesis in 2017. It is a prestigious award and is accompanied by a $1500 prize. In the past, the grand prize has been awarded to:

2016: Peng Shi, "Prediction and Optimization in School Choice"

2015: Inbal Talgam-Cohen, "Robust Market Design: Information and Computation"

2014: S. Matthew Weinberg, "Algorithms for Strategic Agents"

2013: Balasubramanian Sivan, "Prior Robust Optimization"

and the award has had five runners-up: Bo Waggoner, James Wright, Xi (Alice) Gao, Yang Cai, and Sigal Oren. You can find detailed information about the nomination process at: http://www.sigecom.org/awardd.

Sunday, March 04, 2018

How (un)likely is an "intelligence explosion"?

First, Some Background.

A "superintelligent" AI is loosely defined to be an entity that is much better than we are at essentially any cognitive/learning/planning task. Perhaps, by analogy, a superintelligent AI is to human beings as human beings are to Bengal tigers, in terms of general intelligence. It shouldn't be hard to convince yourself that if we were in the company of a superintelligence, then we would be very right to be worried: after all, it is intelligence that allows human beings to totally dominate the world and drive Bengal tigers to near extinction, despite the fact that tigers physiologically dominate humans in most other respects. This is the case even if the superintelligence doesn't have the destruction of humanity as a goal per se (after all, we don't have it out for tigers), and even if the superintelligence is just an unconscious but super-powerful optimization algorithm. I won't rehash the arguments here (Scott does it better) but it essentially boils down to the fact that it is quite hard to anticipate what the results of optimizing an objective function will be, if the optimization is done over a sufficiently rich space of strategies. And if we get it wrong, and the optimization has some severely unpleasant side-effects? It is tempting to suggest that at that point, we just unplug the computer and start over. The problem is that if we unplug the intelligence, it won't do as well at optimizing its objective function compared to if it took steps to prevent us from unplugging it. So if its strategy space is rich enough that it is able to take steps to defend itself, it will. Lots of the most interesting research in this field seems to be about how to align optimization objectives with our own desires, or simply how to write down objective functions that don't induce the optimization algorithm to try to prevent us from unplugging it, while also not incentivizing the algorithm to unplug itself (the corrigibility problem).

Ok. It seems uncontroversial that a hypothetical superintelligence would be something we should take very seriously as a danger. But isn't it premature to worry about this, given how far off it seems to be? We aren't even that good at making product recommendations, let alone optimization algorithms so powerful that they might inadvertently destroy all of humanity. Even if superintelligence will ultimately be something to take very seriously, are we even in a position to productively think about it now, given how little we know about how such a thing might work at a technical level? This seems to be the position that Andrew Ng was taking, in his much quoted statement that (paraphrasing) worrying about the dangers of super-intelligence right now is like worrying about overpopulation on Mars. Not that it might not eventually be a serious concern, but that we will get a higher return investing our intellectual efforts right now on more immediate problems.

The standard counter to this is that super-intelligence might always seem like it is well beyond our current capabilities -- maybe centuries in the future -- until, all of a sudden, it appears as the result of an uncontrollable chain reaction known as an "intelligence explosion", or "singularity". (As far as I can tell, very few people actually think that intelligence growth would exhibit an actual mathematical singularity --- this seems instead to be a metaphor for exponential growth.) If this is what we expect, then now might very well be the time to worry about super-intelligence. The first argument of this form was put forth by British mathematician I.J. Good (of Good-Turing Frequency Estimation!):

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.”

Scott Alexander summarizes the same argument a bit more quantitatively. In this passage, he is imagining the starting point being a full-brain simulation of Einstein --- except run on faster hardware, so that our simulated Einstein operates at a much faster clock-speed than his historical namesake:

It might, like the historical Einstein, contemplate physics. Or it might contemplate an area very relevant to its own interests: artificial intelligence. In that case, instead of making a revolutionary physics breakthrough every few hours, it will make a revolutionary AI breakthrough every few hours. Each AI breakthrough it makes, it will have the opportunity to reprogram itself to take advantage of its discovery, becoming more intelligent, thus speeding up its breakthroughs further. The cycle will stop only when it reaches some physical limit – some technical challenge to further improvements that even an entity far smarter than Einstein cannot discover a way around.

To human programmers, such a cycle would look like a “critical mass”. Before the critical level, any AI advance delivers only modest benefits. But any tiny improvement that pushes an AI above the critical level would result in a feedback loop of inexorable self-improvement all the way up to some stratospheric limit of possible computing power.

This feedback loop would be exponential; relatively slow in the beginning, but blindingly fast as it approaches an asymptote. Consider the AI which starts off making forty breakthroughs per year – one every nine days. Now suppose it gains on average a 10% speed improvement with each breakthrough. It starts on January 1. Its first breakthrough comes January 10 or so. Its second comes a little faster, January 18. Its third is a little faster still, January 25. By the beginning of February, it’s sped up to producing one breakthrough every seven days, more or less. By the beginning of March, it’s making about one breakthrough every three days or so. But by March 20, it’s up to one breakthrough a day. By late on the night of March 29, it’s making a breakthrough every second.

As far as I can tell, this possibility of an exponentially-paced intelligence explosion is the main argument for folks devoting time to worrying about super-intelligent AI now, even though current technology doesn't give us anything even close. So in the rest of this post, I want to push a little bit on the claim that the feedback loop induced by a self-improving AI would lead to exponential growth, and see what assumptions underlie it.

A Toy Model for Rates of Self Improvement

Let's write down an extremely simple toy model for how quickly the intelligence of a self-improving system would grow, as a function of time. And I want to emphasize that the model I will propose is clearly a toy: it abstracts away everything that is interesting about the problem of designing an AI. But it should be sufficient to focus on a simple question of asymptotics, and the degree to which growth rates depend on the extent to which AI research exhibits diminishing marginal returns on investment. In the model, AI research accumulates with time: at time t, R(t) units of AI research have been conducted. Perhaps think of this as a quantification of the number of AI "breakthroughs" that have been made in Scott Alexander's telling of the intelligence explosion argument. The intelligence of the system at time t, denoted I(t), will be some function of the accumulated research R(t). The model will make two assumptions:

- The rate at which research is conducted is directly proportional to the current intelligence of the system. We can think about this either as a discrete dynamics, or as a differential equation. In the discrete case, we have: $R(t+1) = R(t) + I(t)$, and in the continuous case: $\frac{dR}{dt} = I(t)$.

- The relationship between the current intelligence of the system and the currently accumulated quantity of research is governed by some function f: $I(t) = f(R(t))$.

The function f can be thought of as capturing the marginal rate of return of additional research on the actual intelligence of an AI. For example, if we think AI research is something like pumping water from a well --- a task for which doubling the work doubles the return --- then, we would model f as linear: $f(x) = x$. In this case, AI research does not exhibit any diminishing marginal returns: a unit of research gives us just as much benefit in terms of increased intelligence, no matter how much we already understand about intelligence. On the other hand, if we think that AI research should exhibit diminishing marginal returns --- as many creative endeavors seem to --- then we would model f as an increasing concave function. For example, we might let $f(x) = \sqrt{x}$, or $f(x) = x^{2/3}$, or $f(x) = x^{1/3}$, etc. If we are really pessimistic about the difficulty of AI, we might even model $f(x) = \log(x)$. In these cases, intelligence is still increasing in research effort, but the rate of increase as a function of research effort is diminishing, as we understand more and more about AI. Note however that the rate at which research is being conducted is increasing, which might still lead us to exponential growth in intelligence, if it increases fast enough.
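The two assumptions are simple enough to simulate directly. Below is a minimal Python sketch of the discrete dynamics. The function name `simulate` and the starting value $R(0) = 1$ are assumptions of this sketch (the starting value is chosen so that the linear case gives $I(t) = 2^t$), and the logarithmic case is started at $R(0) = 2$ only because $\log(1) = 0$ would stall the dynamics.

```python
import math


def simulate(f, steps, r0=1.0):
    """Discrete toy model: I(t) = f(R(t)) and R(t+1) = R(t) + I(t).

    Returns the list [I(0), I(1), ..., I(steps)].
    r0 is the initial stock of research (an assumed starting point).
    """
    research = r0
    intelligence = []
    for _ in range(steps + 1):
        level = f(research)          # current intelligence
        intelligence.append(level)
        research += level            # research accrues at a rate equal to intelligence
    return intelligence


if __name__ == "__main__":
    choices = {
        "x": lambda x: x,
        "x^(2/3)": lambda x: x ** (2 / 3),
        "sqrt(x)": math.sqrt,
        "x^(1/3)": lambda x: x ** (1 / 3),
    }
    for name, f in choices.items():
        print(name, simulate(f, 1000)[-1])
    # log(1) = 0 would freeze the model, so start the log case at R(0) = 2.
    print("log(x)", simulate(math.log, 1000, r0=2.0)[-1])
```

Swapping in a different `f` is the only change needed to compare the cases discussed in this post.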

So how does our choice of f affect intelligence growth rates? First, lets consider the case in which $f(x) = x$ --- the case of no diminishing marginal returns on research investment. Here is a plot of the growth over 1000 time steps in the discrete model:

Here, we see exponential growth in intelligence. (It isn't hard to directly work out that in this case, in the discrete model, we have $I(t) = 2^t$, and in the continuous model, we have $I(t) = e^t$). And the plot illustrates the argument for worrying about AI risk now. Viewed at this scale, progress in AI appears to plod along at unimpressive levels before suddenly shooting up to an unimaginable level: in this case, a quantity if written down as a decimal that would have more than 300 zeros.

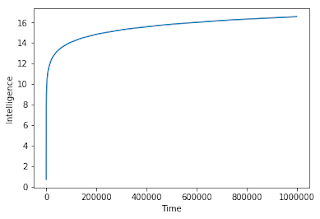

It isn't surprising that if we were to model severely diminishing returns, say $f(x) = \log(x)$, this would not occur. Below, we plot what happens when $f(x) = \log(x)$, with time taken out all the way to 1,000,000 rather than merely 1000 as in the above plot:

Intelligence growth is not very impressive here. At time 1,000,000 we haven't even reached 17. If you wanted to reach (say) an intelligence level of 30 you'd have to wait an unimaginably long time. In this case, we definitely don't need to worry about an "intelligence explosion", and probably not even about ever reaching anything that could be called a super-intelligence.

But what about moderate (polynomial) levels of diminishing marginal returns? What if we take $f(x) = x^{1/3}$? Let's see:

Ok --- now we are making more progress, but even though intelligence now has a polynomial relationship to research (and research speed is increasing, in a chain reaction!) the rate of growth in intelligence is still decreasing. What about if $f(x) = \sqrt{x}$? Let's see:

At least now the rate of growth doesn't seem to be decreasing: but it is growing only linearly with time. Hardly an explosion. Maybe we just need to get more aggressive in our modeling. What if $f(x) = x^{2/3}$?

Ok, now we've got something! At least now the rate of intelligence gains is increasing with time. But intelligence itself is growing only about quadratically in time -- a far cry from the exponential growth that characterizes an intelligence explosion.

Let's take a break from all of this plotting. The model we wrote down is simple enough that we can just go and solve the differential equation. Suppose we have $f(x) = x^{1-\epsilon}$ for some $\epsilon > 0$. Then the differential equation solves to give us:

\[I(t) = \left(\left(1+\epsilon t \right)^{1/\epsilon} \right)^{1-\epsilon} \]

What this means is that for any positive value of $\epsilon$, in this model, intelligence grows at only a polynomial rate. The only way this model gives us exponential growth is if we take $\epsilon \rightarrow 0$, and insist that $f(x) = x$ --- i.e. that the intelligence design problem does not exhibit any diminishing marginal returns at all.
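For readers who want the intermediate steps, here is a sketch of the derivation. It assumes the initial condition $R(0) = 1$, which is what the solution above implies at $t = 0$. Substituting $I(t) = R(t)^{1-\epsilon}$ into $\frac{dR}{dt} = I(t)$ gives a separable equation:

\[\frac{dR}{dt} = R^{1-\epsilon} \;\Longrightarrow\; \int R^{\epsilon-1}\, dR = \int dt \;\Longrightarrow\; \frac{R^{\epsilon}}{\epsilon} = t + \frac{1}{\epsilon} \;\Longrightarrow\; R(t) = \left(1+\epsilon t\right)^{1/\epsilon} \]

\[I(t) = R(t)^{1-\epsilon} = \left(1+\epsilon t\right)^{(1-\epsilon)/\epsilon} \]

Plugging in the three polynomial cases considered earlier:

\[f(x) = x^{1/3}: \; I(t) = \left(1 + \tfrac{2}{3}t\right)^{1/2}, \qquad f(x) = \sqrt{x}: \; I(t) = 1 + \tfrac{1}{2}t, \qquad f(x) = x^{2/3}: \; I(t) = \left(1 + \tfrac{1}{3}t\right)^{2} \]

The exponential case is recovered only in the limit, since $\left(1+\epsilon t\right)^{1/\epsilon} \rightarrow e^{t}$ as $\epsilon \rightarrow 0$.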

Thoughts

So what do we learn from this exercise? Of course one can quibble with the details of the model, and one can believe different things about what form for the function f best approximates reality. But for me, this model helps crystallize the extent to which the "exponential intelligence explosion" story crucially relies on intelligence design being one of those rare tasks that doesn't exhibit any decreasing marginal returns on effort at all. This seems unlikely to me, and counter to experience.

Of course, there are technological processes out there that do appear to exhibit exponential growth, at least for a little while. Moore's law is the most salient example. But it is important to remember that even exponential growth for a little while need not seem explosive at human time scales. Doubling every day corresponds to exponential growth, but so does increasing by 1% a year. To paraphrase Ed Felten: our retirement plans extend beyond depositing a few dollars into a savings account, and waiting for the inevitable "wealth explosion" that will make us unimaginably rich.

Postscript

I don't claim that anything in this post is either novel or surprising to folks who spend their time thinking about this sort of thing. There is at least one paper that writes down a model including diminishing marginal returns, which yields a linear rate of intelligence growth.

It is also interesting to note that in the model we wrote down, exponential growth is really a knife-edge phenomenon. We already observed that we get exponential growth if $f(x) = x$, but not if $f(x) = x^{1-\epsilon}$ for any $\epsilon > 0$. But what if we have $f(x) = x^{1+\epsilon}$ for $\epsilon > 0$? In that case, we don't get exponential growth either! As Hadi Elzayn pointed out to me, Osgood's Test tells us that in this case, the function $I(t)$ contains an actual mathematical singularity --- it approaches an infinite value in finite time.
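The blow-up can also be seen by solving the equation directly, in the same way as in the sub-linear case. This is only a sketch, again assuming the initial condition $R(0) = 1$:

\[\frac{dR}{dt} = R^{1+\epsilon} \;\Longrightarrow\; \int R^{-1-\epsilon}\, dR = \int dt \;\Longrightarrow\; -\frac{R^{-\epsilon}}{\epsilon} = t - \frac{1}{\epsilon} \;\Longrightarrow\; R(t) = \left(1-\epsilon t\right)^{-1/\epsilon} \]

\[I(t) = R(t)^{1+\epsilon} = \left(1-\epsilon t\right)^{-(1+\epsilon)/\epsilon} \]

Under that assumption, both $R(t)$ and $I(t)$ diverge as $t \rightarrow 1/\epsilon$, which is the finite-time singularity.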

Wednesday, January 10, 2018

Fairness and The Problem with Exploration: A Smoothed Analysis of the Greedy Algorithm for the Linear Contextual Bandit Problem

Bandit Problems

"Bandit problems" are a common abstraction in machine learning. The name is supposed to evoke the image of slot machines, which are also known as "One-armed bandits" (or so I am told... Somehow nobody speaks like this in the circles I run in.) In the classic formulation, you imagine yourself standing in front of a bank of slot machines, each of which is different and might have a different distribution on payoffs. You can sequentially decide which machine's arm to pull: when you pull the arm, you get a sample from that machine's reward distribution. Your goal is to pull the arms in such a way so that your average reward approaches what it would have been had you played the optimal strategy in hindsight: i.e. always pulled the arm of the machine that had the highest mean reward. The problem is tricky because you don't know the reward distributions to start. The key feature of the problem is that in order to observe a sample from the reward distribution of a particular machine, you actually have to pull that arm and spend a turn to do it.

There are more complicated variants of this kind of problem, in which your choices may vary from round to round, but have differentiating features. For example, in the linear contextual bandit problem, in every round, you are presented with a number of choices, each of which is represented by some real valued vector, which may be different from round to round. You then get to choose one of them. The reward you get, corresponding to the action you choose, is random --- but its expectation is equal to some unknown linear function of the action's features. The key property of the problem is that you only get to observe the reward of the action you choose. You do not get to observe the reward you would have obtained had you chosen one of the other actions --- this is what makes it a bandit problem. The goal again is to choose actions such that your average reward approaches what it would have been had you played the optimal strategy in hindsight. In this case, with full knowledge of the underlying linear functions, the optimal strategy would simply compute the expected reward of each action, and play the action with highest expected reward (this may now be a different action at each round, since the features change). Again, the problem is tricky because you don't know the linear functions which map features to expected reward.

Bandit problems are characterized by the tension between exploration and exploitation. At any given moment, the algorithm has some guess as to what the best action is. (In a linear contextual bandit problem, for example, the algorithm might just run least-squares regression on the examples it has observed rewards for: the point predictions of the regression estimate on the new actions are these best guesses). In order to be able to compete with the optimal policy, the algorithm definitely needs to (eventually) play the action it thinks is the best one, a lot of the time. This is called exploitation: the algorithm is exploiting its knowledge in order to do well. However, since the algorithm does not observe the rewards for actions it does not play, it also will generally need to explore. Consider someone in front of those slot machines: if he has played each machine once, he has some weak beliefs about the payoff distribution for each machine. But those beliefs have resulted from just a single sample, so they may well be very wrong. If he just forever continues to play the machine that has the highest empirical reward, he might play the same machine every day, and he will never learn more about the other machines --- even though one of them might have turned out to really have higher average reward. He has fooled himself, and because he never explores, he continues to be wrong forever. So optimal algorithms have to carefully balance exploration and exploitation in order to do well.

There are various clever schemes for trading off exploration and exploitation, but the simplest one is called epsilon-Greedy. At every round, the algorithm flips a coin with bias epsilon. If the coin comes up heads (with probability 1-epsilon), the algorithm exploits: it just greedily chooses the action that it estimates to be best. If the coin comes up tails (with probability epsilon), the algorithm explores: it chooses an action uniformly at random. If you set epsilon appropriately, in many settings, you have the guarantee that the average reward of the algorithm will approach the average reward of the optimal policy, as time goes on. Fancier algorithms for bandit problems more smoothly interpolate between exploration and exploitation: but all of them inevitably have to manage this tradeoff.
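To make the coin-flipping description concrete, here is a minimal sketch of epsilon-Greedy for the classic slot machine setting. Everything specific in it is an assumption made for illustration: the function name `epsilon_greedy`, the use of Bernoulli (0/1) rewards, and the rule that an arm that has never been pulled is treated as looking best, so that every arm gets tried at least once.

```python
import random


def epsilon_greedy(arm_means, rounds, epsilon, seed=0):
    """Play a bandit with Bernoulli arms using epsilon-Greedy.

    arm_means: true success probability of each arm (unknown to the learner).
    Returns (number of pulls per arm, average reward per round).
    """
    rng = random.Random(seed)
    k = len(arm_means)
    pulls = [0] * k
    totals = [0.0] * k

    def estimate(arm):
        # Empirical mean reward; unplayed arms look infinitely good (an assumption).
        return totals[arm] / pulls[arm] if pulls[arm] else float("inf")

    reward_sum = 0.0
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(k)            # explore: uniformly random arm
        else:
            arm = max(range(k), key=estimate)  # exploit: current best guess
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        pulls[arm] += 1
        totals[arm] += reward
        reward_sum += reward
    return pulls, reward_sum / rounds


if __name__ == "__main__":
    pulls, average = epsilon_greedy([0.2, 0.5, 0.8], rounds=5000, epsilon=0.1)
    print("pulls per arm:", pulls)
    print("average reward:", average)
```

Setting `epsilon=0` in this sketch gives a purely greedy learner, which is the kind of algorithm discussed later in this post.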

Fairness Concerns

(Contextual) bandit problems are not just an interesting mathematical curiosity, but actually arise all the time in important applications. Here are just a few:

- Lending: Banks (and increasingly algorithms) consider applicants for loans. They can look at applicant features, and observe the repayment history of applicants they grant loans to, but they cannot observe counterfactuals: would an applicant not given a loan have defaulted if he had been given the loan?

- Parole Decisions: Judges (and increasingly algorithms) consider inmates for parole. In part, they want to predict which inmates will go on to re-offend if released, and not release those inmates. But it is not possible to observe the counterfactual: would an inmate who was not released have gone on to commit a crime if he had been released?

- Predictive Policing: Police chiefs (and increasingly algorithms) consider where in their city they want to deploy their police. In part, they want to predict where crimes will occur, so they can deploy a heavier police presence in those areas. But they also disproportionately observe crimes in the areas in which police are deployed.

- Sequential Clinical Trials: Certain kinds of drugs affect patients differently, depending on their genetic markers. But for a new drug, the relationship might be unknown. As patients show up, they can be assigned to different clinical trials, corresponding to different drugs --- but we cannot observe the counterfactual: how would a patient have reacted to a drug she was not given?

I picked these four examples (rather than the more commonly used example of ad targeting) because in each of the above examples, the decision made by the algorithm has an important effect on someone's life. This raises issues of fairness, and as we will see, these fairness questions relate to exploration in at least two different ways.

First, there is an issue that was recently raised by Bird, Barocas, Crawford, Diaz, and Wallach: that exploring can be unfair to the individuals who have the misfortune of being present during the exploration rounds. Consider the example of sequential clinical trials: if a patient shows up on an "exploitation" round, then she is given the treatment that is believed to be the best for her, using a best effort estimate given the information available. But if she shows up during an "exploration" round, she will be given a random treatment --- generally, one that current evidence suggests is not the best for her. Is this fair to her? What if her life depends on the treatment? Exploration is explicitly sacrificing her well-being, for the possible good of future individuals, who might be able to benefit from what we learned from the exploration. It can be that when we are dealing with important decisions about people's lives, we don't want to sacrifice the welfare of an individual for the good of others. Certainly, our notional patient would prefer not to show up on an exploration round. In various other settings, we can also imagine exploration being repugnant, even though it is in principle necessary for learning. Can we (for example) randomly release inmates on parole, even if we believe them to be high risks for committing more violent crimes?

There is another, conflicting concern: if we do not explore, we might not correctly learn about the decisions we have to make. And there are a number of reasons we might expect to see insufficient exploration. Exploration is necessary to maximize long-term reward, but decision makers might be myopic. For example, the loan officers actually making lending decisions might be more interested in maximizing their annual bonuses than maximizing the long-term profits of the bank. Even the CEO might be more interested in quarterly share prices. But myopic decision makers won't explore (tangent: We had a paper at EC thinking about how one might incentivize myopic decision makers to nevertheless be meritocratically fair). The algorithms in use in many settings might also not have been designed properly --- if our learning algorithms don't explicitly take into account the bandit nature of the problem, and just treat it as a supervised classification problem, then they won't explore --- and this kind of mistake is probably extremely common. And finally, as we argued above, we might believe that it is simply not "fair" to explore, and so we intentionally avoid it. But a lack of exploration (and the resulting failure to properly learn) can itself be a source of unfairness. The "feedback loops" that result from a lack of exploration have been blamed by Lum and Isaac and by Ensign, Friedler, Neville, Scheidegger, and Venkatasubramanian as a primary source of unfairness in predictive policing. (See Suresh's recent blog post). Such algorithms can over-estimate crime in the poor neighborhoods in which police are deployed, and underestimate crime in the rich neighborhoods. If they don't explore to correct these mis-estimates, they will deploy more police to the poor neighborhoods, and fewer to the rich neighborhoods, which only exacerbates the data collection problem.

Our New Paper

These two concerns both lead us to wonder how bad things need be if a decision maker doesn't explore, and instead simply runs a greedy algorithm that exploits at every round. Maybe this is because we believe that greedy algorithms are already being run in many cases, and we want to know how much risk we run of developing pernicious feedback loops. Or maybe we want to run a greedy algorithm by design, because we are in a medical or legal setting in which exploration is unacceptable. This is the question we consider in a new paper, joint with Sampath Kannan, Jamie Morgenstern, Bo Waggoner, and Steven Wu. (P.S. Bo is on the job market right now!)

Specifically, we consider the linear contextual bandit problem, described at the beginning of this post. A decision maker must choose amongst a set of actions every day, each of which is endowed with a vector of features. The reward distribution for each action is governed by an unknown linear function of the features. In this case, the greedy algorithm is simply the algorithm that maintains ordinary least squares regression estimates for each action, and always plays the action that maximizes its current point prediction of the reward, using its current regression estimate.

Motivated in part by the problem with exploration in sequential drug trials, Bastani, Bayati, and Khosravi previously studied a two-action variant of this problem, and showed that when the features are drawn stochastically from a distribution satisfying certain strong assumptions, then the greedy algorithm works well: its reward approaches that of the optimal policy, without needing exploration! We take their result as inspiration, and prove a theorem of this flavor under substantially weaker conditions (although our model is slightly different, so the results are technically incomparable).

We consider a model in which the number of actions can be arbitrary, and give a smoothed analysis. What that means is we don't require that the actions or their features are drawn from any particular distribution. Instead, we let them be chosen by an adversary, who knows exactly how our algorithm works, and can be arbitrarily sneaky in his attempt to cause our algorithm to fail to learn. Of course, if we stopped there, then the result would be that the greedy algorithm can be made to catastrophically fail to learn with constant probability: it has long been known that exploration is necessary in the worst case. But our adversary has a slightly shaky hand. After he chooses the actions for a given round, the features of those actions are perturbed by a small amount of Gaussian noise, independently in each coordinate. What we show is that this tiny amount of noise is sufficient to cause the greedy algorithm to succeed at learning: it recovers regret bounds (regret is the difference between the cumulative reward of the greedy algorithm, and what the optimal policy would have done) that are close to optimal, up to an inverse polynomial factor of the standard deviation of the Gaussian noise. (The regret bound must grow with the inverse of the standard deviation of the noise in any smoothed analysis, since we know that if there is no noise, the greedy algorithm doesn't work...)
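The setup can be illustrated with a small simulation. This is only a sketch under simplifying assumptions, not the algorithm or the analysis from the paper: it uses a single unknown parameter vector shared by all actions, a fixed list of base feature vectors standing in for the adversary's choices, Gaussian reward noise with standard deviation 0.1, and a tiny ridge term so that the least squares system is solvable before much data has arrived. The names `greedy_smoothed`, `solve` and `dot` are made up for this example.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def greedy_smoothed(theta, base_contexts, rounds, sigma, ridge=1e-3, seed=0):
    """Greedy play with Gaussian-perturbed features; never explores.

    Returns (cumulative expected regret, final least squares estimate).
    """
    rng = random.Random(seed)
    d = len(theta)
    # Running sums for (ridge-regularized) least squares: gram @ estimate = moment.
    gram = [[ridge if i == j else 0.0 for j in range(d)] for i in range(d)]
    moment = [0.0] * d
    regret = 0.0
    for _ in range(rounds):
        # The "shaky hand": perturb every coordinate independently.
        contexts = [[x + rng.gauss(0, sigma) for x in base] for base in base_contexts]
        estimate = solve(gram, moment)
        chosen = max(contexts, key=lambda x: dot(x, estimate))  # pure exploitation
        reward = dot(chosen, theta) + rng.gauss(0, 0.1)
        for i in range(d):
            moment[i] += reward * chosen[i]
            for j in range(d):
                gram[i][j] += chosen[i] * chosen[j]
        regret += max(dot(x, theta) for x in contexts) - dot(chosen, theta)
    return regret, solve(gram, moment)


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    regret, estimate = greedy_smoothed([1.0, -0.5], base, rounds=500, sigma=0.3)
    print("cumulative regret:", regret)
    print("estimate of theta:", estimate)
```

Varying `sigma` in this sketch is one way to get a feel for how the amount of perturbation affects what the greedy learner ends up estimating.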

The story is actually more nuanced: we consider two different models, derive qualitatively different bounds in each model, and prove a separation: see the paper for details. But the punchline is that, at least in linear settings, what we can show is that "generically" (i.e. in the presence of small perturbations), fast learning is possible in bandit settings, even without exploration. This can be taken to mean that in settings in which exploration is repugnant, we don't have to do it --- and that perhaps pernicious feedback loops that can arise when we fail to explore shouldn't be expected to persist for a long time, if the features we observe are a little noisy. Of course, everything we prove is in a linear setting. We still don't know the extent to which these kinds of smoothed analyses carry over into non-linear settings. Understanding the necessity of exploration in more complex settings seems like an important problem with real policy implications.

Sunday, December 17, 2017

Adaptive Data Analysis Class Notes

Adam Smith and I both taught a PhD seminar this semester (at BU and Penn respectively) on adaptive data analysis. We collaborated on the course notes, which can be found here: https://adaptivedataanalysis.com/lecture-schedule-and-notes/

As part of their final project, students will be writing lecture notes for papers that we didn't have time to cover (listed here: https://adaptivedataanalysis.com/more-reading/ ). I'll post those as they are turned in.

Wednesday, November 15, 2017

Between "statistical" and "individual" notions of fairness in Machine Learning

If you follow the fairness in machine learning literature, you might notice after a while that there are two distinct families of fairness definitions:

- Statistical definitions of fairness (sometimes also called group fairness), and

- Individual notions of fairness.

The statistical definitions are far-and-away the most popular, but the individual notions of fairness offer substantially stronger semantic guarantees. In this post, I want to dive into this distinction a little bit. Along the way I'll link to some of my favorite recent fairness papers, and end with a description of a recent paper from our group which tries to get some of the best properties of both worlds.

Statistical Definitions of Fairness

At a high level, statistical definitions of fairness partition the world into some set of "protected groups", and then ask that some statistical property be approximately equalized across these groups. Typically, the groups are defined via some high level sensitive attribute, like race or gender. Then, statistical definitions of fairness ask that some relevant statistic about a classifier be equalized across those groups. For example, we could ask that the rate of positive classification be equal across the groups (this is sometimes called statistical parity). Or, we could ask that the false positive and false negative rates be equal across the groups (this is what Hardt, Price, and Srebro call "Equalized Odds"). Or, we could ask that the positive predictive value (also sometimes called calibration) be equalized across the groups. These are all interesting definitions, and there are very interesting impossibility results that emerge if you think you might want to try and satisfy all of them simultaneously.

These definitions are attractive: first of all, you can easily check whether a classifier satisfies them, since verifying that these fairness constraints hold on a particular distribution simply involves estimating some expectations, one for each protected group. It can be computationally hard to find a classifier that satisfies them, while minimizing the usual convex surrogate losses --- but computational hardness pervades all of learning theory, and hasn't stopped the practice of machine learning. Already there are a number of practical algorithms for learning classifiers subject to these statistical fairness constraints --- this recent paper by Agarwal et al. is one of my favorites.

Semantics

But what are the semantics of these constraints, and why do they correspond to "fairness"? You can make a different argument for each one, but here is the case for equalizing false positive and negative rates across groups (equalized odds), which is one of my favorites:

You can argue (making the big assumption that the data is not itself already corrupted with bias --- see this paper by Friedler et al. for a deep dive into these assumptions...) that a classifier potentially does harm to an individual when it misclassifies them. If the (say) false positive rate is higher amongst black people than white people, then this means that if you perform the thought experiment of imagining yourself as a uniformly random black person with a negative label, the classifier is more likely to harm you than if you imagine yourself as a uniformly random white person with a negative label. On the other hand, if the classifier equalizes false positive and negative rates across populations, then behind the "veil of ignorance", choosing whether you want to be born as a uniformly random black or white person (conditioned on having a negative label), all else being equal, you should be indifferent!

Concerns

However, in this thought experiment, it is crucial that you are a uniformly random individual from the population. If you know some fact about yourself that makes you think you are not a uniformly random white person, the guarantee can lack bite. Here is a simple example:

Suppose we have a population of individuals, with two features and a label. Each individual has a race (either black or white) and a gender (either male or female). The features are uniformly random and independent. Each person also has a label (positive or negative) that is also uniformly random, and independent of the features. We can define four natural protected groups: "Black people", "White people", "Men", and "Women".

Now suppose we have a classifier that labels an individual as positive if and only if they are either a black man or a white woman. Across these four protected groups, this classifier appears to be fair: the false positive rate and false negative rate are all exactly 50% on all four of the protected groups, so it satisfies equalized odds. But this hides a serious problem: on two subgroups (black men and white women), the false positive rate is 100%! If being labelled positive is a bad thing, you would not want to be a random (negative) member of those sub-populations.
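The arithmetic in this example can be checked with a short Python sketch. It stands in for the uniform, independent population by listing each (race, gender, label) combination exactly once; the variable and function names are only illustrative.

```python
from itertools import product

# One individual per (race, gender, label) combination, so features and
# labels are uniform and independent, as in the example.
population = list(product(["black", "white"], ["male", "female"], [0, 1]))


def classifier(race, gender):
    # Positive if and only if the person is a black man or a white woman.
    return int((race, gender) in {("black", "male"), ("white", "female")})


def error_rates(members):
    """Return (false_positive_rate, false_negative_rate) over `members`."""
    negatives = [(r, g) for r, g, y in members if y == 0]
    positives = [(r, g) for r, g, y in members if y == 1]
    fpr = sum(classifier(r, g) for r, g in negatives) / len(negatives)
    fnr = sum(1 - classifier(r, g) for r, g in positives) / len(positives)
    return fpr, fnr


# The four coarse protected groups.
marginal = {
    "black": error_rates([m for m in population if m[0] == "black"]),
    "white": error_rates([m for m in population if m[0] == "white"]),
    "men": error_rates([m for m in population if m[1] == "male"]),
    "women": error_rates([m for m in population if m[1] == "female"]),
}

# The four race-by-gender intersections.
intersections = {
    (r, g): error_rates([m for m in population if m[:2] == (r, g)])
    for r, g in product(["black", "white"], ["male", "female"])
}
```

Every entry of `marginal` comes out as (0.5, 0.5), while `intersections` shows a false positive rate of 1.0 on black men and white women, and a false negative rate of 1.0 on the other two subgroups.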

Of course, we could remedy this problem by simply adding more explicitly protected subgroups. But this doesn't scale well: If we have d protected binary features, there are 2^(2^d) such subgroups that we can define. If the features are real valued, things are even worse, and we quickly get into issues of overfitting (see the next section).
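To see how quickly this count grows, here is a small sketch. It assumes a "subgroup" means any subset of the 2^d possible feature profiles, so the count includes the empty set and the whole population; the function name is made up for illustration.

```python
def count_subgroups(d):
    """Number of subsets of the 2**d profiles of d binary features."""
    profiles = 2 ** d       # distinct settings of the d binary features
    return 2 ** profiles    # each subset of profiles is one candidate subgroup


for d in range(1, 6):
    print(d, count_subgroups(d))
```

With only five binary protected features there are already more than four billion candidate subgroups.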

Individual Definitions of Fairness

At a high level, the problem with statistical definitions of fairness is that they are defined with respect to group averages, so if you are not an "average" member of your group, they don't promise you anything. Individual definitions of fairness attempt to remedy this problem by asking for a constraint that binds at the individual level. The first attempt at this was in the seminal paper "Fairness Through Awareness", which suggested that fairness should mean that "similar individuals should be treated similarly". Specifically, if the algorithm designer knows the correct metric by which individual similarity should be judged, the constraint requires that individuals who are close in that metric should have similar probabilities of any classification outcome. This is a semantically very strong definition. It hasn't gained much traction in the literature yet, because of the hard problem of specifying the similarity metric. But I expect that it will be an important definition going forward --- there are just some obstacles that have to be overcome. I've been thinking about it a lot myself.

Another definition of individual fairness is one that my colleagues and I at Penn introduced in this paper, which we have taken to calling "Weakly Meritocratic Fairness". I have previously blogged about it here. We define it in a more general context, and in an online setting, but it makes sense to think about what it means in the special case of batch binary classification as well. In that case, it reduces to three constraints:

- For any two individuals with a negative label, the false positive rate on those individuals must be equal

- For any two individuals with a positive label, the false negative rate on those individuals must be equal

- For any pair of individuals A and B such that A has a negative label, and B has a positive label, the true positive rate on B can't be lower than the false positive rate on A. (Assuming that positive is the more desirable label --- otherwise this is reversed).

Again, we are talking about false positive rates --- but now there is no distribution --- i.e. nothing to take an average over. Instead, the constraints bind on every pair of individuals. As a result, the "rate" we are talking about is calculated only over the randomness of the classifier itself.
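In the batch binary classification special case, the three constraints can be written as a check on a randomized classifier. This sketch assumes we are simply handed, for each individual, the probability that the classifier labels them positive (taken over the classifier's own randomness), and that positive is the more desirable label. The function name and tolerance parameter are illustrative and do not come from the paper.

```python
def is_weakly_meritocratic(labels, prob_positive, tol=1e-9):
    """Check the three pairwise constraints for batch binary classification.

    labels[i] is the true label (0 or 1) of individual i, and prob_positive[i]
    is the probability, over the classifier's internal randomness only, that
    individual i is labelled positive.
    """
    neg = [p for y, p in zip(labels, prob_positive) if y == 0]
    pos = [p for y, p in zip(labels, prob_positive) if y == 1]

    # 1. Any two negatives have the same individual false positive rate.
    equal_fpr = not neg or max(neg) - min(neg) <= tol
    # 2. Any two positives have the same individual false negative rate
    #    (equivalently, the same probability of being labelled positive).
    equal_fnr = not pos or max(pos) - min(pos) <= tol
    # 3. No positive individual is less likely to be labelled positive
    #    than any negative individual.
    ordered = not neg or not pos or min(pos) >= max(neg) - tol

    return equal_fpr and equal_fnr and ordered


labels = [0, 0, 1, 1]
ok = is_weakly_meritocratic(labels, [0.2, 0.2, 0.7, 0.7])
unequal_fpr = is_weakly_meritocratic(labels, [0.2, 0.3, 0.7, 0.7])
reversed_order = is_weakly_meritocratic(labels, [0.8, 0.8, 0.3, 0.3])
```

Note that the check needs the true label and the exact classification probability of every individual, which already hints at why the definition is hard to certify from a finite sample.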

This constraint in particular implies that false positive rates are equal across any sub-population that you might define, even ex-post! Problem solved! Why don't we all just use this definition of fairness? Here is why:

Concerns

Weakly meritocratic fairness is extremely similar (identical, except for the added condition 3) to asking for equalized odds fairness on the (potentially infinitely large) set of "protected groups" corresponding to singletons --- everyone forms their own protected group! As a result, you shouldn't hope to be able to satisfy a fairness definition like this without making some assumption on the data generating process. Even if on your sample of data, it looks like you are satisfying this definition of fairness, you are overfitting --- the complexity of this class of groups is too large, and your in-sample "fairness" won't generalize out of sample. If you are willing to make assumptions on the data generating process, then you can do it! In our original paper, we show how to do it if you assume the labels obey a linear relationship to the features. But assumptions of this sort generally won't hold exactly in practice, which makes this kind of approach difficult to implement in practical settings.

The "Fairness Through Awareness" definition at a high level suffers from the same kind of problem: you can only use it if you make a very strong assumption (namely that you have what everyone agrees is the "right" fairness metric). And this is the crux of the problem with definitions of individual fairness: they seem to require such strong assumptions, that we cannot actually realize them in practice.

A Middle Ground

Asking for statistical fairness across a small number of coarsely defined groups leaves us open to unfairness on structured subgroups we didn't explicitly designate as protected (what you might call "Fairness Gerrymandering"). On the other hand, asking for statistical fairness across every possible division of the data that can be defined ex-post leaves us with an impossible statistical problem. (Consider that every imperfect classifier could be accused of being "unfair" to the subgroup that we define, ex-post, to be the set of individuals that the classifier misclassifies! But this is just overfitting...)

What if we instead ask for statistical fairness across exponentially many (or infinitely many) subgroups, defined over a set of features we think should be protected, but ask that this family of subgroups itself has bounded VC-dimension? This mitigates the statistical problem --- we can now in principle train "fair" classifiers according to this definition without worrying about overfitting, assuming we have enough data (proportional to the VC-dimension of the set of groups we want to protect, and the set of classifiers we want to learn). And we can do this without making any assumptions about the data. It also mitigates the "Fairness Gerrymandering" problem --- at least now, we can explicitly protect an enormous number of detailed subgroups, not just coarsely defined ones.

This is what we (Michael Kearns, Seth Neel, Steven Wu, and I) propose in our new paper. In most of the paper, we investigate the computational challenges surrounding this kind of fairness constraint, when the statistical fairness notion we want is equality of false positive rates, false negative rates, or classification rates (statistical parity):

- First, it is no longer clear how to even check whether a fixed classifier satisfies a fairness constraint of this sort, without explicitly enumerating all of the protected groups (and recall, there might now be exponentially or even uncountably infinitely many such subgroups). We call this the Auditing problem. And indeed, in the worst case, this is a hard problem: we show that it is equivalent to weak agnostic learning, which brings with it a long list of computational hardness results from learning theory. It is hard to audit even for simple subgroup structures, definable by boolean conjunctions over features, or linear threshold functions. However, this connection also suggests an algorithmic approach to auditing. The fact that learning linear separators is NP hard in the worst case hasn't stopped us from doing this all the time, with simple heuristics like logistic regression and SVMs, as well as more sophisticated techniques. These same heuristics can be used to solve the auditing problem.

- Going further, let's suppose those heuristics work --- i.e. we have oracles which can optimally solve agnostic learning problems over some collection of classifiers C, and can optimally solve the auditing problem over some class of subgroups G. Then we give an algorithm that only has to maintain small state, and provably converges to the optimal distribution over classifiers in C that equalizes false positive rates (or false negative rates, or classification rates...) over the groups G. Our algorithm draws inspiration from the Agarwal et al. paper: "A Reductions Approach to Fair Classification".

- And we can run these algorithms! We use a simple linear regression heuristic to implement both the agnostic learning and auditing "oracles", and run the algorithm to learn a distribution over linear threshold functions (defined over 122 attributes) that approximately equalizes false positive rates across every group definable by a linear threshold function over 18 real valued racially associated attributes, in the "Communities and Crime" dataset. It seems to work and do well!
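To make the auditing problem from the first bullet concrete, here is a brute-force sketch for subgroups defined by boolean conjunctions over binary protected features. This is deliberately the naive enumeration (3^d conjunctions for d features) that stops scaling almost immediately, not the learning-based heuristic described above. Weighting each group's false positive rate gap by the group's share of the negative examples is an assumption made for this sketch, as are all the names.

```python
from itertools import product


def in_group(conjunction, x):
    # A conjunction fixes each feature to 0 or 1, or leaves it free (None).
    return all(c is None or c == v for c, v in zip(conjunction, x))


def audit_fpr(features, labels, preds):
    """Return (worst_conjunction, weighted_gap) over all conjunctions.

    The gap for a group is (its share of the negatives) times the absolute
    difference between its false positive rate and the overall one.
    """
    negatives = [(x, p) for x, y, p in zip(features, labels, preds) if y == 0]
    overall_fpr = sum(p for _, p in negatives) / len(negatives)
    worst, worst_gap = None, 0.0
    for conjunction in product([0, 1, None], repeat=len(features[0])):
        members = [p for x, p in negatives if in_group(conjunction, x)]
        if not members:
            continue
        mass = len(members) / len(negatives)
        gap = mass * abs(sum(members) / len(members) - overall_fpr)
        if gap > worst_gap:  # ties keep the first conjunction found
            worst, worst_gap = conjunction, gap
    return worst, worst_gap


# Toy population: one individual per (race, gender, label) combination,
# with a classifier that predicts positive exactly when race == gender.
people = list(product([0, 1], [0, 1], [0, 1]))
features = [(r, g) for r, g, _ in people]
labels = [y for _, _, y in people]
preds = [int(r == g) for r, g, _ in people]

worst, gap = audit_fpr(features, labels, preds)
```

On this toy population every single-feature group looks perfectly balanced, and the auditor only finds a violation once it reaches the two-feature conjunctions.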

In Conclusion...

I've long been bothered by the seemingly irreconcilable gap between individual and group notions of fairness. The individual notions of fairness have the right semantics, but they are unimplementable. The group notions of fairness promise something too weak. Going forward, I think these two schools of thought on fairness have to be reconciled. I think of our work as a small step in that direction.

Michael recorded a short talk about this work, which you can watch on YouTube: https://www.youtube.com/watch?v=AjGQHJ0FKMg

Monday, October 16, 2017

Call for Nominations for the SIGecom Doctoral Dissertation Award

The SIGecom Doctoral Dissertation Award recognizes an outstanding dissertation in the field of economics and computer science. The award is conferred annually at the ACM Conference on Economics and Computation and includes a plaque, complimentary conference registration, and an honorarium of $1,500. A plaque may further be given to up to two runners-up. No award may be conferred if the nominations are judged not to meet the standards for the award.

To be eligible, a dissertation must be on a topic related to the field of economics and computer science and must have been defended successfully during the calendar year preceding the year of the award presentation.

The next SIGecom Doctoral Dissertation Award will be given for dissertations defended in 2017. Nominations are due by March 31, 2018, and must be submitted by email with the subject "SIGecom Doctoral Dissertation Award" to the awards committee at sigecom-awards-diss@acm.org

Nominations may be made by any member of SIGecom, and will typically come from the dissertation supervisor. Self-nomination is not allowed. Nominations for the award must include the following, preferably in a single PDF file:

1. A two-page summary of the dissertation, written by the nominee, including bibliographic data and links to publicly accessible versions of published papers based primarily on the dissertation.

2. An English-language version of the dissertation.

3. An endorsement letter of no more than two pages by the nominator, arguing the merit of the dissertation, potential impact, and justification of the nomination. This document should also certify the dissertation defense date.

4. The names, email addresses, and affiliations of at least two additional endorsers.

The additional endorsement letters themselves should be emailed directly to sigecom-awards-diss@acm.org

It is expected that a nominated candidate, if selected for the award, will attend the next ACM Conference on Economics and Computation to accept the award and give a presentation on the dissertation work. The cost of attending the conference is not covered by the award, but complimentary registration is provided.
